James C. Zimring

Partial Truths

How Fractions Distort Our Thinking

Counterintuitive Properties of Rates, Frequencies, Percentages, Probabilities, Risks, and Odds

Organization and Goals of This Book

Part 1: The Problem of Misperception

Chapter 1. The Fraction Problem

Using the Form of a Fraction as a Conceptual Framework

Ignoring the Denominator Is Widespread in Political Dialogue

Changing the Outcome by Kicking Out Data

Talking Past Each Other Using Different Fractions

Fractions Fractions Everywhere nor Any a Chance to Think

Chapter 2. How Our Minds Fractionate the World

The Filter of Our Sensory Organs

How Our Minds Distort the Fraction of the Things We Do Perceive

Chapter 3. Confirmation BiasHow Our Minds Evaluate Evidence Based on Preexisting Beliefs

Confirmation Bias Is Unintentional and Agnostic to Self-Benefit

Timing of Information and the Primacy Effect

Why Confirmation Bias Fits the Form of a Fraction

Two, Four, Six, Eight … Confirmation Bias Is Innate!

One, Five, Seven, Ten … Should We Interpret This Again?

Psychological Reinforcement of Confirmation

Why Did Confirmation Bias Evolve in Humans?

Chapter 4. Bias with a Cherry on TopCherry-Picking the Data

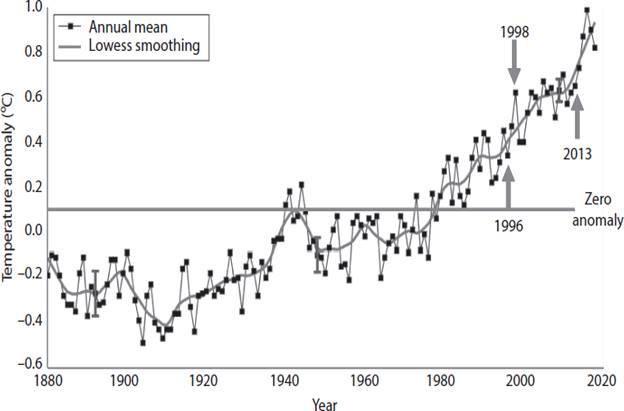

Picking Cherries to Solve Climate Change Problems

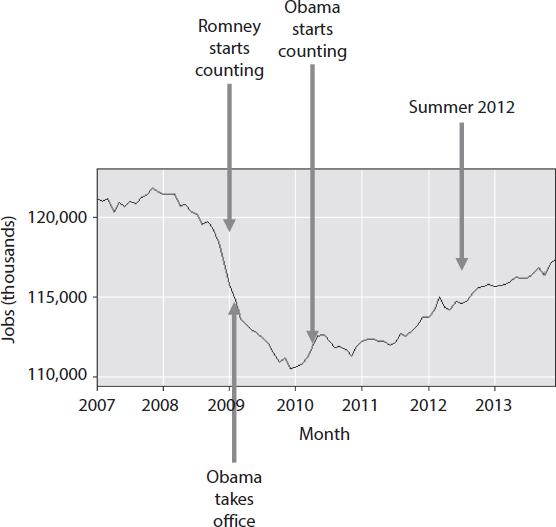

Cherry-Picking Seems Standard Practice in Politics

Part 2: The Fraction Problem in Different Arenas

Chapter 5. The Criminal Justice System

Hiding of Bias Through Categorization

Big Data and Big Data Policing: How Computer Algorithms Can Both Hide and Amplify Bias

Juries Convicting the Innocent

Quantity and Quality of the Evidence in the Context of All the Evidence

Background Beliefs, Motivations, and Agendas in the Bush Administration

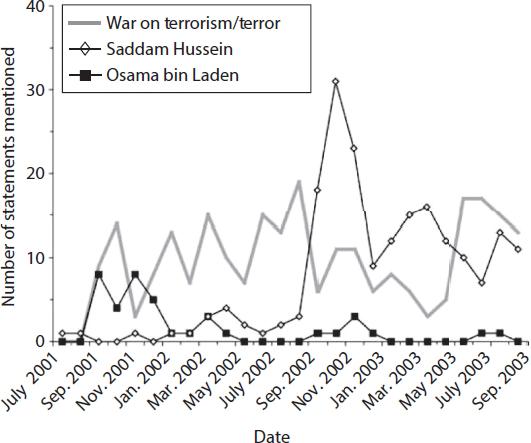

Linking Saddam Hussein to Osama bin Laden and Iraq to Al Qaeda

The Tenuous Nature of the Other Claims of Fact

Reflecting Back on the Run Up to Invasion of Iraq

Chapter 7. Patterns in the Static

Mistaking the Likely for the Seemingly Impossible: Misjudging the Numerator

Chapter 8. Alternative and New Age Beliefs

Science, Skepticism, and New Age Beliefs

A Clash of Cultures: A Clash of Views

Chapter 9. The Appearance of Design in the Natural World

Is the Self-Correcting Fraction of Evolution Circular Reasoning?

The Fine-Tuned Physical Universe

The Certainty of Impossible Accidents

You Can’t Explain Everything So There Must Be a Designer of the Universe

Statistics as a Tool to Mitigate the Problem of Chance Effects

New Variations of Misjudging the Numerator: An Era of Big Data

Confirmation Bias by Scientists and Scientific Societies

Part 3: Can We Reverse Misperception and Should We Even Try?

Chapter 11. How Misperceiving Probability Can Be Advantageous

Net Effects Determine Adaptability in Evolution

Benefits of Confirmation Bias and the Need to Suspend Disbelief in the World

Emergent Properties of Societal Cognition

The Selection Task: Peter Wason Strikes Again

Rationality of Irrationality: Intellectualist Versus Interactionist Models of Human Cognition

The Epistemic Case for Better Knowledge Through Diversity

A Dark Side to Social Networks: Manipulating Public Opinion, Public Policy, and Legislatures

Integrating Results from Epistemic Network Models and the Interactionist Model of Human Reasoning

Chapter 12. Can We Solve the Problems with Human Perception and Reasoning and Should We Even Try?

Is Critical Thinking a Skill We Can Learn or Do We Know How to Do It, but Just Don’t?

Barriers to Educating the Problems Away

Can Debiasing Makes Things Worse? The “Backfire Effect”

The Power of Mechanistic Explanations

Title Page

Partial Truths

How Fractions Distort Our Thinking

James C. Zimring

Columbia University Press

New York

Publisher Details

Columbia University Press

Publishers Since 1893

New York Chichester, West Sussex

Copyright © 2022 James C. Zimring

All rights reserved

EISBN 978-0-231-55407-7

Library of Congress Cataloging-in-Publication Data

Names: Zimring, James C., 1970- author.

Title: Partial truths : how fractions distort our thinking / James C. Zimring.

Description: New York, NY : Columbia University Press, 2022. |

Includes bibliographical references and index.

Identifiers: LCCN 2021039876 (print) | LCCN 2021039877 (ebook) |

ISBN 9780231201384 (hardback) | ISBN 9780231554077 (ebook)

Subjects: LCSH: Critical thinking. | Cognitive psychology. | Suicide victims.

Classification: LCC BF441 .Z55 2022 (print) | LCC BF441 (ebook) |

DDC 153.4/2—dc23/eng/20211104

LC record available at https://lccn.loc.gov/2021039876

LC ebook record available at https://lccn.loc.gov/2021039877

A Columbia University Press E-book.

CUP would be pleased to hear about your reading experience with this e-book at cup-ebook@columbia.edu.

Cover design by Henry Sene Yee

To Kim, Alex, and Ruby

who make my fraction whole

Acknowledgments

Starting with a blank page and ending with a published book is no small process, and it inevitably entails the contributions and encouragement of a great many people—such is certainly the case with this book. I have babbled incessantly to friends and colleagues (the colleagues also being friends) on the underlying themes and concepts in this book for years, and they all have my thanks for the patient feedback and dialog, including the suggestion of specific examples that have been incorporated into the text. In particular, I would like to state my heartfelt appreciation to Steven Spitalnik, Patrice Spitalnik, Angelo D’Alessandro, Katrina Halliday, Chance John Luckey, Krystalyn Hudson, Eldad Hod, Heather Howie, Janet Cross, Jacqueline Poston, Karolina Dziewulska, and Ariel Hay. I would like to specifically recognize Ryan D. Tweney, who kindly served as a sounding board and mentor in cognitive psychology, as well as a friend, and who very sadly passed away during the writing of this manuscript—I will ever miss the ability to pick up the phone and learn from you.

I am particularly indebted to Lee McIntyre who has kindly and graciously mentored me as a developing author, both in general and also regarding critical feedback on the thoughts and concepts in this book. I am also indebted to the academic feedback from Cailin O’Connor, Mark Edward, Karla McLaren, Carla Fowler, David Zweig, and Steven Lubet. I would also like to thank the peer-reviewers who kindly volunteered their time to review and provide critical feedback for the text—while you will ever remain anonymous to me, you have my thanks—it is a significantly stronger book as a result of your constructive criticism.

From the first draft of this book to the finished product, I have had the great fortune to receive the assistance of many capable individuals, including Al Desetta, Miranda Martin, Zachary Friedman, Robyn Massey, Leah Paulos, Marielle Poss, Noah Arlow, and Ben Kolstad. Special thanks to Jeffrey Herman for support and encouragement.

Above all others, I am grateful to my family, for their never-ending support, love, and encouragement.

Introduction

We humans are awfully fond of ourselves. From antiquity to the present day, a considerable part of Western scholarship in philosophy, psychology, biology, and theology has tried to explain how something as splendid and marvelous as humans could ever have come into being. For theologians of the Abrahamic religions, humans were created in an image no less grandiose than that of God. Philosophers as far back as antiquity have categorized humans as superior to all other animals, clearly set apart by the ability to reason. Aristotle described humans as a rational animal.[1] Rene Descartes felt that animals were like automated machines that were not able to think; only humans had the capacity to reason. Of course, such scholars knew that humans behave irrationally at times, perhaps even often, but they explained this behavior as a failure to suppress the animalistic tendencies that lurk within us. Humans were the only animals with the ability to reason, whether or not they always did so. To develop our minds and learn the discipline to give our reason control over our actions was accomplished by the study of philosophy, which was the road to a life well lived by achieving an inner harmony of the mind that lined up with the intrinsic harmony of the universe. In modern terms, whether human reason is a gift from the gods (or one God), or the result of natural selection does not matter—what seems clear is that the human ability to reason exceeds that of all other animals on Earth and sets us apart from the beasts.[2]

Contrary to the long-standing narrative of our self-proclaimed splendor, in just the past 50–60 years, the field of cognitive psychology has described an ever-increasing litany of errors that human cognition makes in observation, perception, and reasoning. Such “defects” are most prevalent for certain types of problems and in particular circumstances, but they are unequivocally present. Of course, all people do not think and act the same way. Cognition varies from person to person, and factors such as particular experience, genetic and environmental variation, and cultural context influence how a given person’s mind may work. Nevertheless, all humans have some version of a human brain in their skull, which uses a general cognitive apparatus that we all carry. When I write of human cognition, I am referring to how humans think on average—that is, not how a specific human may think, but rather how many (or most) do.

Our new understanding of human cognition is nothing short of shocking. Over and over again, in a variety of different ways, cognitive psychologists have demonstrated that (under certain specific conditions), humans consistently fail even simple tasks in reasoning and logic. Perhaps more concerning, humans have defects in seeing and understanding the world around us as it “really” is.[3] Forget that we may not be able to use the facts of the world to reason logically, we cannot even observe many of the facts to begin with. Finally, and perhaps most troubling, humans have remarkably poor insight into our own thinking, often being entirely unaware of the way we process information and the effects of different factors on these processes.

This does not mean we lack insight into our minds; we actually perceive quite a lot about how our minds work. Humans have little problem reflecting on why we have reached certain conclusions, evaluating what may have influenced us, and explaining the reasons and reasoning behind the choices we make—it is just that we often get it wrong. As error prone as humans are in observing and reasoning, we are just as bad in our mental self-assessment. Even when we are bumbling about making errors, we tend to think we are right. If nothing else, at least cognitive psychology has explained why humans have spent so much time trying to explain how splendid humans are—it is in our nature to misperceive ourselves in a favorable light.

Two fundamental questions will be considered in this book. First, how can we really understand, in depth, what kinds of errors we make and how those errors affect our internal thinking and our perception of the external world? To shed light on human thinking, this book focuses on the concept of a fraction to explore the fundamental form underlying a variety of human errors.

Second, if humans really are so cognitively flawed, if we observe incorrectly, and if we think illogically, then how can we explain how effective humans are at using reason to make tools, solve problems, develop advanced technologies, and basically take over the world?

Setting aside the debate of whether human advancements are good or bad—that is, whether we are making progress toward some laudable end or just destroying the world and each other—it is difficult to deny that humans have made massive technological progress and solved many extremely complex problems. How can such an error-prone cognition accomplish such a task? Has cognitive psychology just got it all wrong, or can we find some other explanation? These issues will be formally addressed in chapter 11, although they will be lurking in the background throughout the book. The main focus of this book, however, is to describe one particular form that underlies many errors, to analyze its properties so we can better understand it, to learn to recognize it, to investigate its manifestations in the real world, and to consider strategies to avoid such errors.

The Form of the Errors

Yogi Berra, the famed baseball player and manager who had a talent for witticism (intentional or not), once went into a restaurant and ordered a pizza. When the chef behind the counter asked him if he wanted it cut into four or six pieces, he answered, “you better cut the pizza into four pieces because I’m not hungry enough to eat six.” Berra was also quoted as saying, “Baseball is 90 percent mental; the other half is physical.” Both of these statements are funny because they violate intuitive rules regarding how fractions work.

In this book, we explore how human cognition handles problems that fit the form of a fraction, including risks, odds, probabilities, rates, percentages, and frequencies. It is hard to navigate modern life without encountering and using these concepts. Exploring how fractions work and how we understand (and misunderstand) them will help us see why many of our deep-seated intuitive thought processes, however they work neurologically, are susceptible to particular errors. It also reveals that what are errors in some settings can offer great advantages in others. Overall, understanding fractions can help us understand ourselves.

Counterintuitive Properties of Rates, Frequencies, Percentages, Probabilities, Risks, and Odds

Although simple in concept, the prosaic fraction has complex nuances. As such, we are susceptible to both innocent misunderstanding and purposeful manipulation of fractions. We wield issues like percentages and risk as common notions, and often we do so effectively. We might not notice, however, when we misunderstand them, likely because concepts such as probabilities and frequencies apply to populations. Human cognition evolved in the setting of small nomadic groups of people with basically anecdotal experiences—not in the context of analyzing sets of data that reflect populations.[4] This likely explains why humans favor anecdotal information to high-quality population-based data, even though for many purposes, the latter is profoundly more powerful than the former, if (and only if) analyzed properly.[5]

The result is that common sense is commonly mistaken, in particular when applied to types of information that we did not evolve to handle. This likely explains why erroneous thinking can “feel right” by our intuitions—we don’t recognize that we are analyzing different kinds of information. But there is good news. When humans are exposed to explanations and demonstrations that reveal they may be wrong, they have the capacity to remedy their conclusions, if not their underlying instincts. The bad news is that humans are much less adept at modifying their thinking to spot and prevent errors on their own. Even when we discover what errors can occur, and learn to recognize the circumstances in which they do so, the right answer may still “feel” incorrect. Eons of evolution are not so easily overcome.

A Few Caveats

In writing this book, an inescapable irony has been ever present in my mind. I strive to give a balanced view and consider alternative arguments, but I nevertheless am selective in the evidence I present. Book length, author bandwidth, and reader attention span are limited. In other words, in writing a book about noticing only a fraction of information in the world, I can present only a fraction of the available evidence—both for and against this concept. I am mindful that this book is written in a Western philosophical and intellectual tradition. I am strongly committed to avoiding notions of cognitive or cultural imperialism; however, I am a product of my culture and biased by my perspective. Finally, I wrote this book using a version of the human brain (although some who know me might deny that claim). So, by my own arguments, I am prone to misperceive the fraction about misperceiving the fraction, and to be quite unaware that I have done so. To the extent that the interactionist model of human reasoning is correct (as detailed in chapter 11), at least I know that my arguments will be vetted through the dispute and disapproval of others. My experience as a scientist and author makes me confident that, to the extent that anyone takes notice, there will be no shortage of disputation and criticism. In the greatest traditions of scientific practice, I welcome this intellectually, if not also emotionally.

Organization and Goals of This Book

Part 1 of the book defines and explains a series of circumstances that can lead to misunderstanding as a result of the form information or ideas take (that can be represented by fractions). I use examples from both the controlled setting of the laboratory and also the real world to describe these processes and to understand their nuances.Part 2 builds onpart 1 to further explore manifestations in the real world and in particular contexts. The areas explored range from politics to the criminal justice system, to alternative and New Age beliefs, to the argument for intelligent design of the universe, and even to the hard sciences. This wide range of areas is explored because the cognitive processes discussed are present whenever human minds think, and as such, they manifest in whatever humans think about.Part 3 explores how cognitive errors also can be highly advantageous and arguably essential to the ability of humans to figure things out. These considerations bear directly on the apparent contradiction between how error prone humans are and how successful we have been in advancing our understanding and technology. The impulse to automatically think that we should eradicate what we perceive as errors may be misguided—like many things, good or bad is seldom black and white. It is a net effect and a matter of context, and an informed strategy should take such considerations into account. The final chapter discusses what we have learned about how to mitigate or suppress these cognitive effects, if and when it is prudent to do so.

Part 1: The Problem of Misperception

Chapter 1. The Fraction Problem

In 1979, James Dallas Egbert III, a native of Ohio, was a student at Michigan State University (MSU). Egbert, who went by the name of “Dallas,” was a child prodigy, entering MSU at the precocious age of 16.[6] He was also an enthusiastic player of Dungeons and Dragons (D&D), some might say a fanatic. On August 15 of that year, he disappeared. A handwritten message was found in his dorm room that seemed like a suicide note, but no body was found, and an investigation was launched into what befell him.[7]

D&D was a widely popular role-playing game in which players engaged in a joint imaginative exercise and acted out the personas of medieval adventurers in a monster-ridden world full of magic, mystery, and combat. I describe this from personal experience, and with no small sense of nostalgia. In my youth, I was an avid D&D player, entirely engrossed in the game. Like any new trend that engulfs a generation of kids, D&D was strange and unfamiliar to their parents. It was a mysterious black box, full of violence, demons and devils, and sinister magic. In my mother’s generation, one had to fear Elvis Presley’s gyrating hips; for my generation, parents were suspicious of D&D and kept a careful eye on this odd new fad.

Investigators who were trying to find Dallas learned that he frequently played a form of D&D that involved real life role-playing in the eight miles of labyrinthian steam tunnels that lay beneath MSU. Perhaps something had gone tragically wrong in the tunnels. The media quickly latched onto the D&D angle. Newspaper headlines were nothing less than sensational:[8]

”Missing Youth Could Be on Adventure Game”

”Is Missing Student Victim of Game?”

”Intellectual Fantasy Results in Bizarre Disappearance”

”Student May Have Lost His Life to Intellectual Fantasy Game”

”Student Feared Dead in ‘Dungeon’”

An exhaustive search of the steam tunnels turned up a great many curiosities, but nothing that gave a clue as to what happened to Dallas.[9] Ultimately, James Dallas Egbert was recovered alive and in relatively good physical health, on September 12, 1979, almost a full month after his disappearance. How did the detectives finally track him down? They didn’t. Dallas called one of the detectives who was looking for him and asked to be retrieved. He was in Morgan City, Louisiana, 1,156 miles away from East Lansing, working in an oil field and living in a dilapidated apartment with some other people, whom he refused to let be identified.[10]

The James Dallas Egbert case focused nationwide attention on D&D. Shockingly, a string of suicides and homicides occurred in the early 1980s, committed by adolescents who frequently played D&D. By 1985, worries about D&D as a game that could cause psychosis, murder, and suicide had developed into a fully matured movement. There was nothing inappropriate about the concern regarding the possible association of playing D&D and suicide. Some correlations are real and may even be causal. If something is potentially dangerous, it should not be ignored, and significant evidence suggested that D&D might truly be dangerous.

Great media attention focused on the concerns that playing D&D was dangerous. Even 60 Minutes (among the most famous and accomplished U.S. news shows) ran a focused segment on the problem. Gary Gygax (one of two originators of D&D) gave an interview on the show and was asked a direct question: “If you found 12 kids in murder suicide with one connecting factor in each of them, wouldn’t you question it?” Gygax answered, “I would certainly do it in a scientific manner, and this is as unscientific as you can get.” The segment made the following statement: “There are those who are fearful that the game, in the hands of vulnerable kids, could do harm; and there is evidence that seems to support that view,” followed by a list of individuals who played D&D, their ages, and what had happened to them.

So how should one assess the damning evidence put forth? A more detailed analysis subsequently established that 28 teenagers who often played D&D had committed murder, suicide, or both. One might ask, should we just ban the game? What are we waiting for?[11] After all, 28 lives have been lost. What additional facts does one need? Actually, several additional facts are needed.

The first fact is that D&D had become so wildly popular by 1984 that an estimated three million teenagers played it. The second fact is that the general rate of suicides among all U.S. teenagers, at that time, was roughly 120 suicides per year for every one million teenagers. This means that for the three million teenagers who played D&D, one would predict an average annual suicide rate of 360, in the absence of any additional risk factors. In other words, the seemingly striking number of 28 over several years was actually 12-fold lower than the number of suicides one would expect (in the population who played D&D) in a normal year, each and every year. It thus appears that D&D may have been, if anything, therapeutic and decreased the rate of suicides.[12] After considering the number of teenagers playing D&D and the background rate of suicide, the evidence that 60 Minutes said “seems to support this view” that D&D was dangerous actually supported the opposite view.

A large number of studies has been published in scientific journals, nonscientific journals, the lay press, dissertations, and online writing on this topic (all told more than 150 works).[13] At the end of the day, no credible evidence whatsoever supports the assertion that role-playing games in general, or D&D in particular, increase any risk of dangerous behaviors, including homicide or suicide.[14]

Sadly, almost a year after he returned home, on August 11, 1980, James Dallas Egbert shot a .25-caliber bullet into his head. His life-support machines were turned off six days later.[15] We will never know for sure why Dallas killed himself. But we do know that a credible association between playing D&D and suicide cannot be made and we have no reason to suspect fantasy role-playing games hurt Dallas in any way. It is more likely that D&D actually helped him cope with his struggles. Mental illness and depression do not need an external cause to manifest in the human mind. If a cause must be posited, it is much more likely that it was associated with the societal shaming Dallas seemed to have experienced as a result of being nonheterosexual. Unlike playing D&D, such shaming is well known to actually correlate with an increased risk of suicide.[16]

Given this explanation, the error in misinterpretation is probably pretty clear. But how can we formally describe it? If we can identify a general form of the error that was made, we can remember that form, and be on the lookout for it in other situations. One such instrument to describe this form is the concept of a fraction.

Using the Form of a Fraction as a Conceptual Framework

For many of you, terms like “numerator” and “denominator” may resurrect long-buried memories of the tyranny of a middle-school mathematics teacher or the regrettable trauma of muddling through long story problems trying to figure out percentages. Problems like this:

Johnny bought $6.00 of fruit and Sally bought $9.00 of fruit. Johnny only bought apples (which were $2.00 apiece) and Sally only bought oranges (which were $1.00 apiece). What percent of the pieces of fruit were apples?[17]

Don’t panic. You are likely no longer judged on such abilities, and the rest of this book makes no further mention of story problems. The use of simple mathematical concepts is all we need.

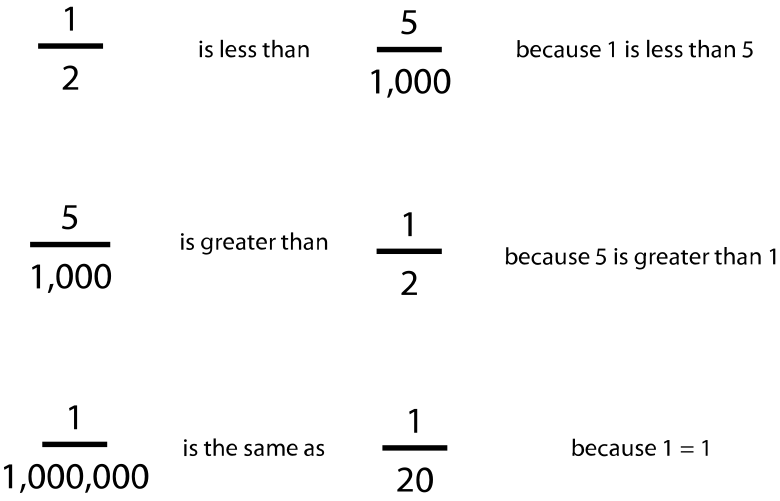

In the definition of a fraction that we will work with, the number on the top is the numerator, which tells you how many of a thing have a certain property. The number on the bottom is the denominator, which tells you how many total things there are.[18] Consider a simple fraction like 1/2, which is just the mathematical representation of the common notion “one-half.” In this case, the numerator is the top of the fraction, “1,” and the denominator is the bottom of the fraction, “2” ( figure 1.1). We can see that a fraction indicates how many of a total population (the denominator) has a particular property (the numerator).

1.1 The simple fraction 1/2.

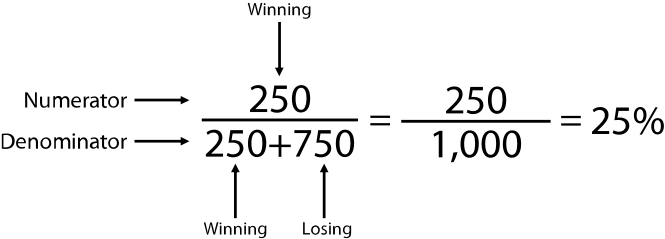

If 1,000 scratch-off lottery tickets are on display in a gas station, then the fraction of winning tickets is the number of winning tickets (i.e., the numerator) over the total number of tickets (i.e., the denominator), or 250/1,000—in other words, 1/4 (i.e., one out of every four, or one-quarter). Importantly, the denominator includes the numerator. In other words, the numerator is just the 250 winning tickets, whereas the denominator is the 250 winning tickets and the 750 losing tickets, to include all 1,000 tickets ( figure 1.2).[19]

1.2 An example of the numerator and denominator, using lottery tickets.

Many common concepts that humans consider and discuss every day, such as rates, frequencies, percentages, probabilities, risks, and odds, can be expressed using fractions. I am talking about these terms as they are commonly used in English, and not their precise mathematical definitions, which have some subtle (but important) differences. We are concerned with the common use of the words and the concepts to which they are attached.[20]

For example, the common saying that an event is “one in a million,” although often not used literally, nevertheless can be captured in mathematical language by the fraction 1/1,000,000. When the New York Times reports that “One in seven people in the United States is expected to develop a substance use disorder at some point,”[21] it is the same as saying that substance use disorders occur at a rate of one out of seven people, with a frequency of 1/7, and that 14.3 percent of people will have a substance use problem, that there is a probability of 0.14, and that the risk is 1 in 7 or the odds are 1 to 7.[22] Thus, many common terms and concepts we use in everyday life can be represented in the form of a fraction.

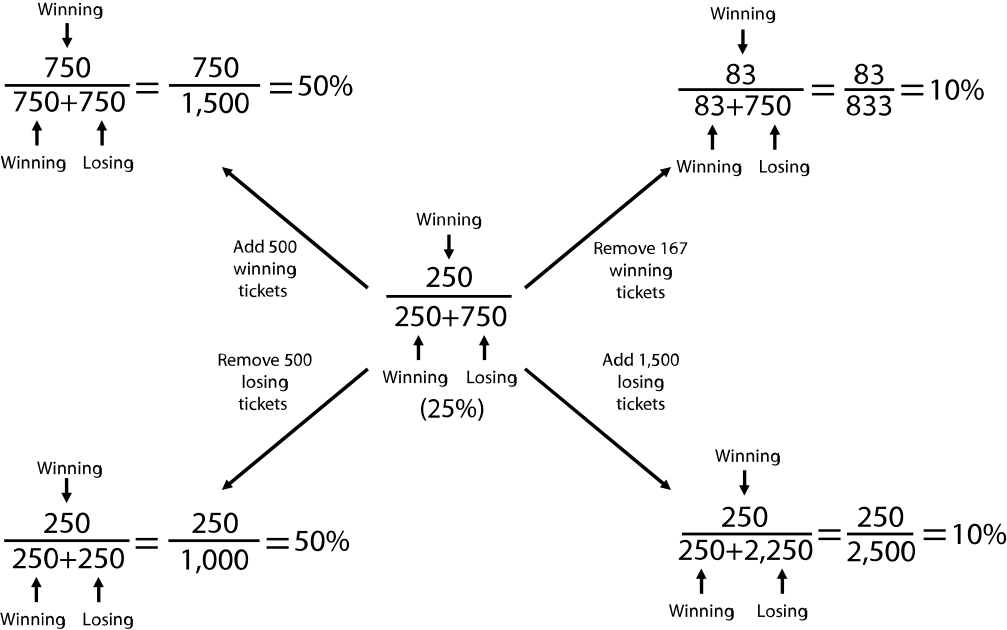

Fractions have particular properties. The numerical value of a fraction can go up or down according to different mechanisms ( figure 1.3). An increase in the numerator or a decrease in the denominator both make the value of a fraction go up—one can increase the odds of getting a winning lottery ticket from a display case, to the same extent, by either adding more winning tickets or removing some losing tickets. If we start with 250/1,000 winning tickets, then 25 percent of the tickets are winning (1 in 4 odds or probability of 0.25). If we add 500 winning tickets, the fraction is now 750/1,500, or 50 percent of tickets are winning (1 in 2 odds or probability of 0.50). Alternatively, starting with 250/1,000 tickets, we can subtract 500 losing tickets. The fraction is now 250/500, or 50 percent of tickets are winning (1 in 2 odds or probability of 0.50). Both approaches increase the value of the fraction by same amount.

1.3 The odds of getting a winning lottery ticket as affected by different changes to the numerator and denominator.

Conversely, a decrease in the numerator or an increase in the denominator both make the value of a fraction go down—one can decrease the odds of getting a winning ticket from a display case by removing winning tickets or by adding more losing tickets. If we start with 250/1,000 winning tickets, then 25 percent of the tickets are winning (1 in 4 odds or probability of 0.25). If we take away 167 winning tickets, then the fraction goes down to 83/833 and 10 percent of the tickets are winning (1 in 10 odds or probability of 0.10). Alternatively, we can leave the number of winning tickets the same (250) but add 1,500 losing tickets. Then, the fraction goes down to 250/2,500, or 10 percent of the tickets are winning (1 in 10 odds or probability of 0.10).

The depth to which the simple properties of fractions are intuitively misunderstood by people runs deep. A fairly humorous, albeit embarrassing example of this was discovered in the 1980s with regards to a great American standard—the fast-food hamburger. McDonald’s quarter-pounder (i.e., 1/4 pounder) had been a dominant force in the hamburger market since it was introduced in 1971. In an effort to knock the 1/4 pounder off its pedestal, A&W introduced a burger that was favored by consumers in blinded taste tests and cost less than the 1/4 pounder. Even better, the A&W burger was a larger quantity of meat, coming in at one-third of a pound (1/3 pound). Sadly, for A&W, their new 1/3-pound hamburger was a flop. Once it was clear that it was failing despite its many virtues, analysis of customer focus groups demonstrated why people were not purchasing it. Consumers thought 1/3 pound of meat was less than 1/4 pound, because 3 is less than 4.[23]

Common language we use to describe changes in fractions can also lead to counterintuitive results. For instance, consider if a stock has a value of $1,000/share. Then say that its value goes down by 50 percent but later goes up by 50 percent. To many people, it seems that the stock should now be valued at $1,000/share, after all, it went down and then up again by the same amount. Actually, it did not. Instead, it went down and then up by the same percentage. When it went down by 50 percent, the value was $500/share. When it then went up by 50 percent, the value was now $750/share. The percentage change is a function of the value that is changing.

Alternatively, consider the meaning of the percentage increase in the number of people afflicted by certain diseases. According to the Centers for Disease Control and Prevention (CDC), there are (on average) seven cases of plague each year in the United States.[24] In contrast, more than 800,000 people die from vascular disease in the United States each year (e.g., heart attack or stroke). So, someone might tell you that the number of plague cases and deaths from vascular disease both increased by 300 percent. In the case of plague that would equate to only twenty-one more cases, but in the case of vascular disease, it would equate to 2.4 million more deaths. Even though both values went up by 300 percent, the numerical magnitude of the change between the two diseases is not even close.

Ignoring the Denominator

How can we utilize the concept of a fraction to formally explain what happened in the case of teenage suicides and playing D&D? The likelihood of someone who plays D&D committing murder or suicide is a simple fraction. Those who both play D&D and also commit murder or suicide are on the top of the fraction (numerator) and the total number of people who play D&D are on the bottom (denominator). The fraction would appear like this:

People who play D&D and commit murder or suicide / Everyone who plays D&D

The media and those concerned were focusing on the numerator but did not take the denominator into account.

Why is ignoring the denominator such a problem? If I focus on only the numerator and don’t consider the denominator, then each of the statements shown in figure 1.4 seem to be correct. When considering the effect of the denominator, however, each of these statements is clearly wrong. Calculation of risk takes the form of a fraction, and as such, unless one accurately considers both the top and bottom of the fraction, then one cannot determine the risk. Yet, when faced with a number of events (in this case, children who played D&D and committed murder or suicide, the numerator), people often jump to a risk assessment without considering the total number (in this case, the number of kids playing D&D, the denominator).[25]

1.4 Clearly incorrect statements that appear to be true if the denominator is ignored.

Focusing on the numerator alone is not always an error; sometimes, the denominator is not relevant, and its inclusion could lead to an incorrect result. Sometimes, we simply need to know a numerical value and are not concerned about the rate, frequency, percentage, probability, risk, or odds. For example, I might be a healthcare provider who is trying to adequately stock my pharmacy with medication for heart disease. What I really need to know is how many people in my community have heart disease. For these purposes, it is irrelevant to me what percentage of the population has heart disease. I have no particular need of the denominator (the total number of people in my community). I only need to know the numerator (the absolute number of people with heart disease regardless of how many people are in the community).

Whenever an issue of rate, frequency, percentage, probability, risk, or odds is important, then both the numerator and denominator are needed. To focus on one to the exclusion of the other can lead to error. An epidemiologist who was trying to figure out the rates of heart disease between two different cities could make little use of the numerator alone. Knowing that there were 10,000 cases last year in each city is not useful without knowing how many people live in each city.[26] With just the numerator (10,000), it looks like the rate of heart disease between cities might be the same—after all, both had the same number of cases. This determination changes drastically, however, if one learns that the first city is a booming metropolis like New York (with approximately eight million inhabitants), whereas the second city is a small Midwestern town with twenty thousand inhabitants.[27] When assessing frequency or risk, neither the numerator nor the denominator alone is sufficient; both are needed for either one to be meaningful.

None of this is profound. Humans intuitively recognize instances like these. If someone said to you that the risk of heart disease is the same in the large city and the small Midwestern town because they have the name number of cases, it would be pretty clear that the statement is confusing risk of disease with number of people afflicted. In many cases, however, human cognition does not make the leap to ask what the size of the denominator is or even to consider that there is one. This tendency is often subconscious, and people remain unaware that they are not taking the whole fraction into account.

Regrettably, publication of data-driven analysis is not compelling to many people, who prefer their intuitive interpretations of the world. Part of this may be intrigue. In the artful words of Jon Peterson with regards to the D&D issue, “The myth of the game that drove college kids insane was simply more powerful than the dull reality that so much hype and furor derived from a private investigator’s misguided hunch.”[28] However, it seems to go much deeper than that. Humans have the persistent tendency to notice only numerators. Recently, the New York Times wrote a follow-up piece about the panic surrounding D&D in historical terms.[29] As the article points out, the error is consistent, ongoing, and in no way restricted to D&D: “Today, parental anxieties have turned to videos, notably those dripping with gore. Can it be mere coincidence, some ask, that the mass killers in Colorado at Columbine High School and the Aurora movie theater, and at the grade school in Newtown Conn., all played violent electronic games?”

The shootings at Columbine High School, Sandy Hook Elementary School, and the seemingly endless stream of mass shootings, before and since, are horrific events of profound tragedy. We should be vigilant in seeking causes of violent behavior and doing everything we can to prevent them. The important (and tragic) point is that seeking such causes cannot stop with finding a few things the assailants had in common and then trying to ban these things without more detailed analysis that includes the whole picture (i.e., the denominator). In other words, like the D&D case, is the rate of violent acts among adolescents playing violent electronic games higher than it is in all adolescents in general? If so, is it a causal factor?[30] As is good and appropriate, research into this issue is ongoing.

Indeed, an association between violent video games and aggressive behavior has been reported in multiple studies,[31] even though this association may be due to what is called publication bias (a particular manifestation of the type of error we are exploring in this book that is discussed in detail in chapter 10).[32] Thankfully, scientists continue to study this question using methods that control for such confounders. Failing to assess the denominator not only risks our focusing energy on the vilification of benign, or even beneficial things, but also distracts and misdirects our attention from what the real causal problems may be.

Ignoring the Denominator Is Widespread in Political Dialogue

Politicians frequently ignore or purposely obscure the denominator to manipulate political facts. Consider a common type of claim made by politicians and political parties, pretty much every economic quarter and each electoral cycle. Claims like “we made the biggest tax cut in history,” or “we created more jobs than anyone in history,” or “we had the largest economic growth in history,” or “under my opponent, the economy lost more value than in any recession in the history of the country.” The arguments would not be made, and repeated over and over, if they weren’t convincing. But many times, these claims are simply pointing to the dollar amount or the number of jobs without considering the size of the economy or work force, that is, they focus on the numerator and ignore the denominator.

Consider that if today the Dow Jones drops by 182 points, it is a slightly down day, but it is really no big deal. The famous stock market crash of 1929, however, was a drop of this amount and it was disastrous. The Dow Jones at its peak was around 381 in the year 1929 before the crash, whereas it was over 30,000 in the year 2021. So, a $182 drop in 1929 was a loss of 48 percent; the same drop in 2021 is less than 1 percent. It would be correct to say that the stock market had larger decreases under presidents Carter, Reagan, Bush, Clinton, Bush, Obama, and Trump than it ever did under Herbert Hoover as our country slid into the Great Depression if, that is, you consider just the numerator (the dollar change in the market) and not the denominator (the value of the market).

Consider the U.S. Presidential campaign of 2016. Then-candidate Donald Trump repeatedly argued that we need to close our borders to immigrants and aggressively deport undocumented immigrants. The argument put forth by Donald Trump was the need to protect citizens from criminal acts by immigrants. He repeatedly referred to the murder of a U.S. citizen named Kathryn Steinle by an undocumented immigrant named Juan Francisco López-Sánchez. The argument was simple. Kathryn Steinle was killed by an undocumented immigrant; therefore, undocumented immigrants are dangerous. So, getting rid of undocumented immigrants will remove dangerous people and thus protect citizens by decreasing murder.

Implicit in Donald Trump’s rhetoric is the idea that immigrants are more dangerous than U.S. citizens. This is easy to infer, given Trump’s own words: “When Mexico sends its people, they’re not sending their best … They’re bringing drugs. They’re bringing crime. They’re rapists. And some, I assume, are good people.”[33]

Certainly, if Mexico actually were selectively sending criminals to the United States, then this would be a serious concern. To properly understand the situation, however, we need more information. We have to know the top of the fraction (i.e., how many murders are carried out by immigrants each year, the numerator) as well as the bottom of the fraction (i.e., how many total immigrants are in the United States, the denominator). Then, to assess relative risk, we must compare this to another fraction (i.e., the same fraction applied to nonimmigrants), in particular, the following: number of murders carried out by nonimmigrant citizens/number of total nonimmigrant citizens. The outcome of this analysis is really quite clear.

Numerous studies have shown that crime rates among immigrants occur at a much lower frequency than nonimmigrants.[34] In other words, after taking both the numerators and the denominators into account, citizens born in the United States are more likely to commit violent crimes than immigrants are. The same finding holds true even if one focuses solely on undocumented immigrants.[35] If this is correct, we would have a lower rate of crime (often called per capita crime) with more immigrants. Indeed, as immigration increased in the 1990s, the United States experienced a steady decline in per capita crime during that decade. Of course, we cannot distinguish between simple correlation and causation, but we cannot ignore the data either. The data are what the data are, and they certainly show what we could expect if immigrants are less dangerous than natural-born citizens. The observed decrease in crime along with increased immigration is so significant that it is relatively immune to statistical manipulation. As Alex Nowrasteh, an analyst at the Cato Institute, explains, “There’s no way I can mess with the numbers to get a different conclusion.”[36]

Of course, we could be concerned that this argument has altered the fraction along the way. Although much anti-immigration rhetoric speaks against immigration in general, Trump’s claims seemed to be focused on immigrants from Mexico. Perhaps immigrants from Mexico really are more likely to be criminals, but immigrants from other parts of the world are so law abiding that they offset the effects. This is not the case, however, and the same trends are observed when one limits analysis to immigrants from Mexico alone.[37] Notably, subsequent to the presidential election of 2016, Juan Francisco López-Sánchez was tried and acquitted of all murder and manslaughter charges by a jury who found that the shooting was an unintentional and horrible accident. Juan Francisco López-Sánchez was convicted of having a firearm as a felon.

The claim is not that immigrants do not commit crimes. Clearly, many crimes are carried out by individuals who have immigrated to the United States. However, it does not follow that because some immigrants carry out crimes, therefore crimes are more likely to be carried out by immigrants. To justify this conclusion, we would need to observe a greater rate of crime by immigrants compared with crimes by nonimmigrants, and this is simply not the case. Many politicians do not make this comparison, however, either because they do not know that they should or because taking such factors into account does not support their agenda. Clearly, Ms. Steinle’s murder was a horrible thing. But the greatest way to pay respect to Ms. Steinle would come from strategies that target the actual causes of violent crime.

Changing the Outcome by Kicking Out Data

In 1574, in the Scottish Village of Loch Ficseanail, a man named Duncan MacLeod was drinking with his close friend Hamish. Duncan made the following claim to Hamish: “All true Scotsmen can hold their liquor without fail!” About this time, a tall handsome man with a red beard and a plaid kilt walked into the bar and ordered a glass of scotch. By the time he had finished his drink, he was slurring his speech, seemed disoriented, and proceeded to fall off his barstool onto the ground, where he lay unconscious. Hamish looked at Duncan curiously.

”Well,” Hamish said, “I guess you were wrong when you claimed that all true Scotsmen can hold their liquor.”

”I was not wrong,” bellowed Duncan, “for you see, this fellow lying on the floor is clearly no true Scotsman.”

This is a version of the classic story that gives rise to the no true Scotsman fallacy. The initial claim made by Duncan is no less a fraction or percentage than the earlier examples. Basically, it is saying that 100 percent of True Scotsmen can hold their liquor. One can guarantee that the statement is always true by selectively kicking Scotsmen who can’t hold their liquor out of the fraction (i.e., removing them from the club of true Scotsmen). This story is crafted to make the fallacy clear, and it is easy to dismiss this as a quaint tale that makes a good joke.

In the real world, people engage in this kind of thinking all the time. Sometimes it is obvious, such as Donald Trump’s statement during the 2016 election: “I will totally accept the results of this great and historic presidential election—if I win.”[38] In other words, the only election that is a legitimate election is an election I win; therefore, I will win 100 percent of legitimate elections. Indeed, President Trump really was dedicated to this notion, accounting for his relentless efforts to challenge the legitimacy of the 2020 election. In his view, the strongest evidence that the election must have been rigged is the outcome. Trump did not win, so therefore it is not a legitimate election (if a True Scotsman can’t hold their liquor, then they aren’t a True Scotsman). Although clear in this case, the same type of error often manifests in subtler ways.

We constantly hear about the unemployment rate in America—it is one of the key economic indicators that is used to assess the health of our economy. Because this is a rate, it therefore fits the form of a fraction. At first glance this does not seem to be complicated: the unemployment rate should be a fraction with the number of people who don’t have jobs on the top (numerator) and the total number of people on the bottom (denominator). But the fraction cannot actually include all people who don’t have jobs, because that would include children too young to work, people unable to work (due to disability or illness), and people who do not want to work (e.g., retired people or those who choose not to seek employment). The unemployment rate would be immense, and the figure would not be meaningful. This is the opposite of the no-true-Scotsman fallacy, as individuals who do not qualify for the fraction are being included erroneously. It would be like bragging that the rate of prostate cancer in America could never rise to more than 50 percent if you include all Americans in your calculations (i.e., both people who were born with prostates and those who weren’t).

The real issue here is what constitutes an unemployed person? The standard definition of an unemployed person is someone who does not have a job, is able to work, and is looking for employment (i.e., has applied for a job in the past four weeks). This means that people who want a job and are seeking one (but not in the past four weeks) are neither employed nor unemployed—they are kicked out of the fraction just like the drunken Scotsman. People who have stopped seeking employment are not counted at all. This means that if the job market gets bad enough that people who want to work grow discouraged and stop applying for a few weeks, then the unemployment rate will drop even though the number of employed people has not changed. The point is that an economy that is adding many new jobs and an economy in which jobs are so hard to find that people seeking work give up for a few weeks both result in a drop in unemployment rates.

When we are given a term like unemployment rate, we are provided with a single number, like 8 percent. Understanding the fraction behind this number and knowing how the numerator and denominator are defined is essential to interpreting the single numbers we are given (e.g., unemployment rate). Regrettably, politicians and others with particular agendas purposefully exploit this type of fraction to manipulate opinion and promote misunderstanding that favors their priorities. We seldom are given the specific particulars of fractions (such as unemployment rate), which remain invisible to us unless we seek them out. We are given only the single number that comes out of the calculation.

The Special Case of Averages

On May 8, 2020, as the shutdown in response to COVID-19 was making its effect felt on the economy, the U.S. Labor Department reported an amazingly strong growth in wages.[39] Did companies suddenly realize how much they valued their workers and how tough times were and thus increase wages? No, actually, wages didn’t change at all—at least not for most individuals. Government statistics are often an average that is given to assess how a group of people is performing. The average is calculated by adding the values of each member of a group and then dividing by the number of people in the group. This is a type of fraction. An important distinction adds special properties to an average not found in the other fractions we have discussed thus far. An average is not a fraction in which we are counting the number of things with a certain property (in the numerator) over the total number of things (in the denominator). Rather, the numerator is the sum of quantitative values attributed to each thing, such as income. Consider the following:

Three people each earned a salary. The three salaries were 2, 4, and 6. What is the average salary?

(2 + 4 + 6)/3 = 4

Note that there are different ways to get to the same average. For example, if all three people made a salary of 4, then the average also would be 4:

(4 + 4 + 4)/3 = 4

Or if the salaries were 1, 1, and 10, the average would likewise be 4.

(1 + 1 + 10)/3 = 4

So, although the average is an important measure, it does not tell you anything about how the numbers are distributed. This is where the field of statistics steps in to consider the properties of the population under analysis.

How does an understanding of averages help us make sense of the U.S. Labor Department report? Because calculations of average salary apply only to those who actually are making a salary, people who lost their jobs are removed from the calculation (in other words, they are kicked out of the fraction). Job loss was highest among those making the least, so the average salaries (of those remaining) was higher, but not because anyone’s wage had gone up. Instead, lower wage earners were removed from the fraction. As reported by the Washington Post, “So, nobody’s actually earning more. It’s just that many of the lowest earners are now earning nothing.”[40]

Not appreciating all of the properties of the fraction behind a number, and the rules by which it is modified, leads to claims, perceptions, and beliefs that do not reflect reality. This is the power of fractions to deceive.

Talking Past Each Other Using Different Fractions

On Tuesday, July 28, 2020, Donald Trump was interviewed by journalist Jonathan Swan on the HBO show Axios. The interview caused quite an uproar, with opponents of Trump claiming it pointed to his incompetence.[41] The specific exchange that caught everyone’s attention was an argument on whether the United States was doing better or worse than other countries in its response to the COVID-19 pandemic. Listening carefully to the discussion, it becomes clear that President Trump and Jonathan Swan were really arguing about which fraction should be used to figure out what was actually happening with the pandemic.

President Trump acknowledged that the United States had more cases than any other country in the world, but he claimed that this was misleading, because the United States also was testing many more people; hence, of course, more cases would be identified. President Trump was absolutely correct in this regard. It is true that if two countries each had the same rate of infection (cases/number of people) and one country tested 10 times more people than the other country, then the country that tested more would detect 10 times more cases. This analysis, however, focuses on the numerator and ignores the denominator. Instead, if we divide the number of cases by the number of people tested, then the two countries would have identical rates of infection.[42] This is the power of fractions: they allow interpretation of what the number of cases really mean in the context of the population. So, in this regard, Trump could have been absolutely correct when he stated that “[b]ecause we are so much better at testing than any other country in the world, we show more cases.”

Although more testing will lead to reporting a greater number of cases, this does not mean that reporting a greater number of cases can only be due to more testing. Many different things can lead to more cases, including an increased rate of infection. We can easily determine the actual cause of increased numbers of cases with the use of the right fraction. If Trump’s claim was correct, then the fraction (positive tests/total number people tested) should not be higher in the United States than elsewhere. Regrettably for the United States, this was not the case. On July 27, 2020, the day before the Axios interview, the United States had the third highest percentage of positive tests of nations being monitored, with an 8.4 percent positivity rate compared with countries doing much better, including the United Kingdom (0.5 percent) and South Korea (0.7 percent).[43] In light of this fact, to say that the United States had more cases only because it was testing more people is not supportable. Regrettably, Trump’s claims do not hold up to the available data and are a distortion of the truth.

To be fair, however, there are many ways to define the numerator and denominator of a fraction that can alter its properties. Countries might be using different testing methodologies that have a range of sensitivities and specificities for the virus, which would alter the numerator. Regarding the denominator, two countries could have identical rates of infection with one country performing tests only on people who have symptoms and the other country broadly testing asymptomatic people who have had contact with infected people, or even as part of random testing. In other words, the actual fraction being measured in country 1 is (positive tests/sick people tested) and in country 2 it is (positive tests/sick and nonsick people tested). Country 1 would report a higher rate of infection than country 2 (even if the overall rate of infection was identical). Because country 1 is selecting the population that is being tested (using symptoms as a screening tool), the incidence of positive tests will be higher. This is a case of a faulty comparison in which it appears the formula for fractions are the same, but they actually are different. It’s an easy mistake to make. The label “positive test rate” describes both fractions, even though the fractions are fundamentally different based on who is included in the fraction.

This same problem can occur within a single nation over time. For example, early in the pandemic resources for testing were limited, and only sick people were being tested. Later, as testing resources became more widespread, contacts and random people were also being tested. Thus, the frequency of positive cases would drop, making it look as though the epidemic was getting better even though it wasn’t. This could produce such a large drop as to make it look like the epidemic was improving even while the epidemic was worsening.

To avoid these types of errors, Jonathan Swan focused not on the number of positive tests that were being reported, but rather the death rate (number of deaths from COVID-19/total population of the country). Why would this make a difference? First, there is no ambiguity in the numerator—dead is dead (although one could argue about the actual cause of death, and some people did). Second, the denominator is the total population of the country, which is a fixed value. Jonathan Swan informed Trump how poorly the United States was doing in the number of deaths per total population compared with other nations and explained how this figure was increasing in the United States. If Trump was correct that increases in reported cases did not reflect higher rates of infection, but were only due to more testing (i.e., the epidemic wasn’t getting worse), then deaths would not increase.

Jonathan Swan: “The figure I look at is Death, and death is going up now … it’s 1,000 cases per day.”[44]

Trump: “Take a look at some of these charts … we’re gonna look … Right here, the United States is lowest in numerous categories, we’re lower than the world, we’re lower than Europe…. right here, here’s Case Death.”

Swan: “Oh you’re doing death as a proportion of cases I’m talking about death as a proportion of population; that’s where the U.S. is really bad, much worse than South Korea, Germany, etc.”

Trump: “You can’t do that; you have to go by the cases.”

In this instance, both Trump and Swan were saying correct things. Trump was correct that compared with other countries, the United States had a low number (death/diagnosed case of COVID-19) and Swan was also correct that the United States had a high number (death from COVID-19/population). Trump was using one fraction, and Swan was using another. They disagreed on which was the correct fraction to use as well as what the respective fractions indicated. Swan’s fraction indicated that if you lived in the United States you were more likely to die from COVID-19 than if you lived in another country. In contrast, Trump’s fraction indicated that once you were diagnosed with COVID-19 in the United States, you were less likely to die than in other countries. Trump’s fraction was important regarding issues of quality of patient care but had no relevance to how many people were getting infected or how well the United States was handling public health measures to limit the spread of COVID-19.

It is unclear if Trump really believed that his fraction was the correct analysis or if he understood it all too well and was just grasping at straws to find any metric where the United States was doing better than other nations. It seems clear, however, that his conclusions were simply incorrect, and not only because of the previous arguments. The actual number of people who die from COVID-19 is what it is, regardless of whether or not we detect infection. Our testing affects if we count a death as being caused by COVID-19, but in and of itself, it does not change whether the person dies. As such, it is meaningful to consider that the overall number of Americans that died in 2020 was considerably higher than what was predicted based upon annual averages (since 2013), and the timing of increased deaths lined up with the timing of COVID-19 infections.[45] If it looks like we have more deaths only because we are doing more testing, then why did the absolute number of people dying dramatically increase?

Maneuvers to adjust fractions or use the incorrect fractions to argue an agenda-driven point are by no means particular to Trump. This is common among politicians of any party. Although this example focuses on the United States, because the narrative is so clear, politicians in essentially all nations utilize such ploys, from autocratic monarchies to representative republics. That it is common makes it no less misleading or manipulative. This example illustrates how the issue of what fractions you choose, and how you are using them, are baked into the real-time events that unfold in front of us every day. This case also demonstrates why we need a firm concept of fractions, how they work, and what they mean to untwist whether claims are in line with reality.

Fractions Fractions Everywhere nor Any a Chance to Think

Once you develop the habit of looking for fractions, you can start to see them everywhere—and you can start to see where fractions are not being used when they should be. A great example of this is in self-help systems, for both people and corporations. Consider how often you have seen a book or seminar entitled something like “The Five Habits of Self-Made Millionaires” or “Strategies of Highly Successful and Disruptive Startup Companies.” Actually, the list seems like an endless conveyor belt of the same types of claims, over and over again, ad nauseam. The statement “The Five Habits of Self-Made Millionaires” implies that doing these five things contributed to the ability of others to make lots of money, and if you do the same five things, you likely will have the same success. This sounds reasonable. Something is tragically missing, however. You guessed it: we are ignoring a vital component of the fraction at play.

In any given system for success, from personal habits to corporate culture, let’s just grant that the success stories are real and were caused by some strategy (i.e., not just dumb luck). The analysis cannot simply consist of noticing the characteristics of the people or corporations and emulating them. Why not? It would be an inevitably true statement that the following are traits of the richest 10 people in the United States: (1) breathing, (2) eating, (3) blinking, (4) urinating, (5) defecating, and (6) yawning.[46] The characteristics that are usually described in the self-help programs we are discussing are more reasonably associated with success than are breathing and eating, but that does not resolve the issue we are discussing. The issue is not what characteristics are found in successful people; the issue is what characteristics are found in successful people and not in less successful people. If an activity increases the likelihood of success, then it should be present at a higher rate in successful people and corporations. When you are seeking a rate, then what you are considering is in the form of a fraction.

The next time you encounter a success system like we are describing, see whether there is an assessment of how often the habits or traits being described are present in people or corporations who are not successful, or even in those who are clearly failures. If you are attending a seminar on this system, ask the question: “where is the data on how frequently these traits are found in the general population and is it higher or lower than highly successful people?” Even better, do not accept a single anecdotal example as the evidence, but rather ask for the data on groups of people. In my experience, you won’t get a good answer, if you get an answer at all. (Sadly, you won’t get your money back either, even if you ask very nicely.) In most cases, the real secret to success is coming up with a system that promises to teach the secrets of success and then selling books and running seminars.

Summary

In this chapter, I introduced fractions and showed how their properties affect descriptions of real-life situations and interpretations of claims of fact. These examples illustrated how not considering that a fraction is at play, not taking the whole fraction into account, or not using the correct fraction can lead to confusion, miscommunication, and—in some cases—manipulation. Learning to recognize when fractions are at play is essential. Fractions are almost inevitably involved when discussing rates, frequencies, percentages, probabilities, averages, risks, and odds.

Once we learn to look for them, fractions are everywhere, although not always obvious on the surface. Having recognized that a fraction is involved, understanding the nature of the fraction and the rules by which the numerator and denominator are defined (and manipulated) is essential to understanding what the information really means. Learning to identify the nuances of how fractions are defined and manipulated to generate claims of fact can change the way we understand the world.

Most of the examples in this chapter have focused on circumstances external to ourselves. Applying the concept of a fraction is not limited to the external world, but rather it extends to understanding what happens inside our minds. This will be the focus of the next chapter.

Chapter 2. How Our Minds Fractionate the World

Humans tend to perceive a rich and complex world all around them. Our minds, however, distort the frequency of the things we encounter, not only by what we can sense but also by what we notice and remember. Our intuitions of the frequency of things are often a far cry from what occurs in the external world. We do not need other's manipulation for this to happen, we do it to ourselves, as a fundamental property of human perception and cognition—and worst of all, we are often entirely unaware that we do it.[47]

The Filter of Our Sensory Organs

Our range of hearing is from 20 Hz to 20 kHz. The world is full of sound waves outside this range, but we are not capable of hearing them. Our eyes can see light in the range of 380 to 740 nanometers, but we are oblivious to infrared or ultraviolet light outside this range. Microscopes reveal a world of details, creatures, and object too small for our unaided eyes to see. Our olfaction and taste can detect the presence of certain chemical entities, while having no ability whatsoever to detect others. Our tactile system can detect wrinkles down to 10 nanometers in length, but no smaller. Smaller wrinkles are there, but to human fingers, they feel seamless and smooth. The world is full of sounds and light and chemicals and textures that fall outside our perceptual limits. They are not available to us, and as a result, they do not affect how we experience the world.

What about the information that our senses can detect: How much of it do we notice? People often are surprised to learn how oblivious we are to much of the input coming into our senses. We sample miniscule bits of the available information and infer the rest of the world, even though it is right in front of us and all around us.[48] One example is found in a fascinating body of work about how people read. In 1975, George W. McConkie and Keith Rayner attempted to ask a seemingly simple question:[49] How much can a person read within one visual gaze—that is, within a “single fixation”? To answer this question, McConkie and Rayner devised a rather ingenious experiment.

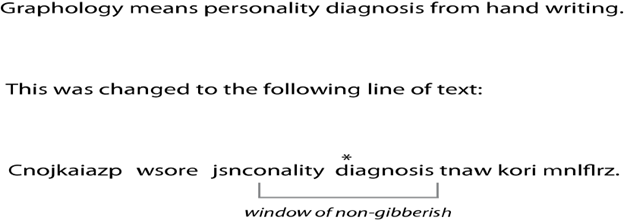

They built an apparatus and programmed a computer such that it could detect where a person was looking on a computer screen of text. The computer's memory was programmed to contain a coherent page of readable text. The computer, however, created a window around the portion of text the person was looking at and displayed the actual text only in that window; any text that fell outside that window was changed to gibberish (a mishmash of random letters). This process was entirely unknown to the subject, who believed they simply were reading a page of regular text on a computer. An example of how this looked is shown in figure 2.1.

2.1 An example of how a line of text would appear to a subject with a point of fixation on the asterisk.

Source: Reprinted by permission from Springer Nature Customer Service Centre GmbH: George W. McConkie and Keith Rayner, “The Span of Effective Stimulus During a Fixation in Reading,” Perception and Psychophysics 17, no. 6 (1975), © Psychonomic Society, Inc.

As the person read the passage of text and their gaze continued to move, the computer shifted the window to keep up with their gaze, changing whatever letters now fell into the window into readable text and reverting the letters that no longer were in the window into garbled text. By varying the size of the window of readable letters, and determining when the person noticed gibberish, the experimenters could tell how many letters a person was able to perceive within a single fixation. In other words, people would notice the garbled text only when the window of nongibberish was smaller than their window of perception. Through this approach, and with follow-up studies, the researchers determined that people identify a letter of fixation and that the window of perception extends 2–3 characters to the left and 17–18 characters to the right (the letter of fixation is indicated by the asterisk in figure 2.1).[50] The exact nature of the window depends on the size and type of text, the size of the words, and the direction of reading—as some languages are read in different directions.

Although interesting, the size of the reading window is not the point for the current discussion; rather, it is the rest of the text that one is not perceiving when reading 20 characters at a time. What came out of these experiments is the appreciation that when reading, people are not aware of the text outside their narrow window of observation. As explained by Steven Sloman and Philip Fernback in their book The Knowledge Illusion: Why We Never Think Alone, the subjects are entirely oblivious to the rest of the document:

Even if everything outside just a few words is random letters, participants believe they are reading normal text. For anyone standing behind the reader looking at the screen, most of what they see is nonsense, and yet the reader has no idea. Because what the reader is seeing at any given moment is meaningful, the reader assumes everything is meaningful.[51]

For all you know, this page that you are currently reading has the very same properties, and you simply cannot tell just by reading it at a normal distance. It is not that the letters that turn to gibberish, which you do not notice turning to gibberish, are outside of your field of vision, but rather that the light photons reflecting off of those letters are still hitting your eyes, but your focus and perception is such that you do not notice them. The only way you perceive them is if they stay gibberish when you look right at them, as in the case of hglaithceayl—flgoenicisth kkjanreiah.

The issue is not limited to odd laboratory situations of coherent reading windows surrounded by garbled text. Consider your ability to focus on a conversation you are having with a person across the table from you in a crowded restaurant. The words of other diners are still hitting your ears, as are all the sounds of the restaurant, you just don’t notice them. Even if you try, you are not able to listen simultaneously to multiple conversations, at least not very well. This is the basis of misdirection in stage magic; what you do not notice happening is not hidden. Rather, the brilliance of the trick is that it is right in front of you, but you do not notice it because your attention is focused on something else. Your inattention to the mechanics of the trick makes you functionally blind to it, so it appears to be magic.[52] This has been called “inattentional blindness,” and it is one way human perception filters input from the world around us.

In their book The Invisible Gorilla: How Our Intuitions Deceive Us, Christopher Chabris and Daniel Simons provide extensive examples of situations in which we do not perceive the world in front of us.[53] A famous example of this is when subjects are asked to watch a film in which players wearing either white or black jerseys are dribbling several basketballs and passing the balls back and forth between them. The viewers are instructed to count the number of passes of balls from players wearing one color of jersey. Some of those watching get the number of ball passes correct, and others don’t, but that is not the point. The point is that 46 percent of people fail to notice the person in a gorilla suit, who walks across the middle of the screen, thumps its chest, and then goes wandering off again.[54] Because the viewers are focusing their attention on counting the passes, they don’t notice the rest of the scene; they have inattentional blindness for the gorilla.

Inattentional blindness is not just a quaint trick that can be evoked with movies of basketballs and gorillas. Rather it occurs in much more serious ways in real life, contributing considerably to motorcycle fatalities in what is described as “looked-but-failed-to-see” crashes. This consists of a vehicle (typically a car) cutting off an oncoming motorcycle. Because of inattentional blindness, drivers are much more likely to perceive an oncoming car, than an oncoming motorcycle, even though both are clearly visible, are moving, and the driver is staring directly at them.[55] Because they are looking for cars, cars are all they tend to see—they are oblivious to the rest of the world in front of them, including the motorcycle.

How Our Minds Distort the Fraction of the Things We Do Perceive

I grew up in the northern suburbs of Chicago. Each winter vacation, our family drove down to Florida for a two-week holiday. Years earlier, while in the military, my father had been in a plane that had bad engine troubles in the air, followed by a very precarious landing, which had convinced him that flying was dangerous. So, our family drove everywhere, because it was safer, at least in our estimation. Our main form of amusement in Florida was hanging out at the beach; however, I had a strong aversion to entering the Gulf Waters. The movie world had just been taken by storm by Jaws; I was not going into the ocean. No, I was going to live a long life and not take any reckless risks, like flying or exposing myself to shark attacks. I am happy to report that my clever strategy and my thoughtful approach to life worked exactly as planned. As of the time of writing this, I have neither been in a plane crash nor have I been attacked by a shark, and by all measures I am still very much alive.

Personal experience, both that you have had yourself and stories you have heard from others (i.e., anecdotal evidence), is important information to consider when making future choices. Avoiding a situation with which you have had a bad personal experience is acting rationally based on available data, albeit a small amount of data. If you have heard stories of people dying while engaging in a certain activity, it seems a good idea to avoid doing it. Watching a news story about how people are being killed in certain situations and then avoiding those situations is just common sense. However, when assessing the relative risks of doing one thing versus another (like driving to Florida versus flying), choosing personal experiences and anecdotal evidence over broad population-based data can be highly problematic. Regrettably, this is precisely what humans tend to do.

As we explored in chapter 1, risk fits the form of a fraction. To properly compare the risk of driving with the risk of flying, my father could have gone to the library and searched for information regarding the risk of dying in a car crash versus a plane crash—today, one could just search on the internet. Of course, many additional levels of detail could be examined to inform probabilistic thinking, such as the airline, the type of plane, the type of car, the route taken, and weather conditions. Our family did not do any of this; in fact, we did not even think to do it—and that is precisely the point. It is a human tendency to navigate issues of risk by simply avoiding things that one hears scary stories about, without considering the actual risks involved.

Cognitive psychologists have made much progress in defining patterns of reasoning that humans are prone to in different situations and under different circumstances. Amos Tversky and Daniel Kahneman were instrumental in developing our understanding of “heuristics.” A heuristic is a process by which human minds rapidly solve complex problems by replacing them with analogous but simpler problems. Heuristics have been described as rule-of-thumb thinking or as a “mental shortcut.” This process can easily be demonstrated using a famous example of purchasing a baseball and a bat. Consider the following problem described by Daniel Kahneman and Shane Frederick:

A bat and a ball cost $1.10 in total.

The bat costs $1.00 more than the ball

How much does the ball cost?[56]

The majority of people will answer that the ball costs $0.10. That way the bat and the ball cost $1.10 cents together and the problem has been solved.

But wait a minute. If one reflects further on the answer, then they will discover a problem: $1.00 is only $0.90 greater than $0.10. So the bat costing $1.00 and the ball costing $0.10 does not fulfill the condition that the bat costs $1.00 more than the ball. The problem can be fulfilled only by making the bat cost $1.05 and the ball cost $0.05.