Marek Kohn

As We Know It

Coming to Terms With an Evolved Mind

The Prime Maxim of evolutionary thinking:

Evolutionary theory cannot say how strong our instincts are.

An evolved mind is not a predetermined mind.

It’s time to get off the savannah.

[Front Matter]

[Synopsis]

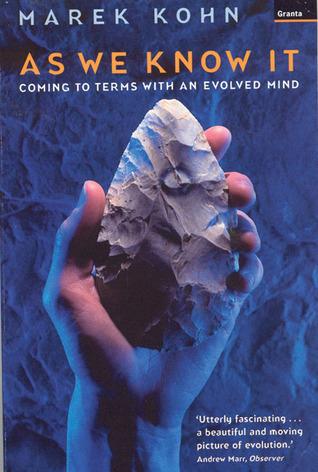

This book provides an imaginative, carefully researched account of how the human mind evolved. It tells us how our immediate ancestors might have thought, and seen the world, in the absence of language, gods or culture. Kohn relates that ancient heritage to our humanity, and examines the influence of our hominid past on our own behaviour, as creatures who speak, symbolize and create.

Central to the book is a fascinating meditation on the handaxe, that beautiful stone blade crafted again and again for hundreds of thousands of years by our proto-human ancestors. In his brilliant reconstruction of the uses and meanings of the handaxe, Kohn takes us into an alien world that is strangely close to our own. He draws on controversial theories of the development of sexual relations in hominid groups to show that the evolution of trust was crucial to the stabilization of human society.

This is a work of socio-biology, in that it applies Darwinism to human culture. Unlike almost all works of ‘evolutionary psychology’ to date, however, it seeks to recapture Darwinism from the political right, and to show that a better understanding of our evolutionary history need not lead to an imposing of limits on who we are and what we may become.

Also by Marek Kohn

Dope Girls: The Birth of the British Drug Underground

The Race Gallery: The Return of Racial Science

[Title Page]

As We Know It

Coming to Terms with

an Evolved Mind

MAREK KOHN

Granta Books

London

[Copyright]

Granta Publications, 2/3 Hanover Yard, London N1 8BE

First published in Great Britain by Granta Books 1999

Copyright © 1999 by Marek Kohn

Marek Kohn has asserted his moral right under the Copyright, Designs and Patents Act, 1988, to be identified as the author of this work.

All rights reserved. No reproduction, copy or transmission of this publication may be made without written permission. No paragraph of this publication may be reproduced, copied or transmitted save with written permission or in accordance with the provisions of the Copyright Act 1956 (as amended). Any person who does any unauthorized act in relation to this publication may be liable to criminal prosecution and civil claims for damages.

A CIP catalogue record for this book is available from the British Library.

1 3 5 7 9 10 8 6 4 2

ISBN 1 86207 025 3

Typeset by M Rules

Printed and bound in Great Britain by Mackays of Chatham plc

[Dedication]

For Sue and Teo

Contents

Acknowledgements

1. Costs

2. Symmetry

3. Trust

4. Benefits

Notes and References

Further Reading

Index

Acknowledgements

Thanks to Helena Cronin, for introducing me to modern Darwinian theory, for rising to scepticism in good part, and for enabling me (and many others) to listen to the ideas of a wealth of inspiring and provocative scientists; to Rob Kruszynski, for long discussions and comprehensive references in his personal capacity, and for allowing me to hold the Furze Platt Giant in my own hands, as well as letting me see the Museum’s trove of handaxes — more splendid in my eyes than jewels! — in his official capacity as a curator at the Natural History Museum, to which thanks is also due; to Chris Knight and Steve Mithen for papers, conversation and the opportunity to engage with their ideas at length; to Ian Watts, Richard Wilkinson, Kenan Malik, Rosalind Arden, Chris Stringer and Oliver Curry, for similar reasons; to Rob Foley, Geoff Miller and Camilla Power, for their valuable comments on a draft text; to Mark Roberts and his colleagues for allowing me to visit Boxgrove; to Phil Harding for knapping me a handaxe, one of my favourite pieces of silicon-based technology; to Karen Perkins at the Quaternary Section of the British Museum’s Department of Romano-British Antiquities, for showing me some of the Section’s collection of handaxes (like the Natural History Museum’s, but much, much bigger); to my editor Neil Belton, for his work on the book, his sympathetic approach to the difficulties of getting it written, and for his steadfast commitment to my writing, which has given me the ground beneath my feet that any author needs.

Above all, thanks to my wife Sue, without whose love, kindness and understanding I could not have undertaken this project; and thanks also to Patricia Arens and Julie Baldwin for looking after our son Teo, despite whom I finished it.

One: Costs

1

A million years ago there were minds that may have been human, but not as we know it. By that time, beings with minds like these had occupied much of the Old World, from the south of Africa to the east of Asia. Sometime between then and now, our ancestors made an infinitely more important migration, and moved into the imagined world of symbols, language, culture and magic.

They thus became human as we know it, and we are left with the question of how to understand this change of state. On the one hand, we are animals whose brains have evolved by the same processes as any other feature of living organisms. On the other, we now live within a sphere of artificial information in which changes can be made in pursuit of goals, instead of the single blind steps that natural evolution takes, and which in many other respects has a life of its own. The temptation is to see this as a higher realm, and a land of exemptions, where humans run free of the laws that govern all other species.

Humans are special, unarguably, and a partition between biology and culture gives us a tidy scheme within which we can make sense of our lives. But, like many borders, it is largely a fiction, and sooner or later is likely to cause trouble. It also places a barrier in front of one of the most profound and marvellous questions we could possibly ask. How could beings without culture create it, and move into this imagined world? To explore this question, we need to contemplate what minds were like before culture, as best we can. We have to think of our ancestors in the hominid family as Darwinian creatures and nothing but, in order to work out why it was in these beings’ reproductive interests to enter the imagined world, and to specify the conditions under which this transition could have been possible. In short, we need an evolutionary explanation for the origins of culture. Without that, we can’t fully come to terms with an evolved mind.

Perhaps we aren’t ready to do this, but we should; not just for the sake of truth and self-knowledge, but because of the things that are wrong with the way we live, and because we need to recover a confidence that we can make them better. As We Know It offers a Darwinian idea that may help make sense of minds a million years old, and describes a radical evolutionary theory of how language and culture began. They are presented not as pat solutions to great questions, but as illustrations of the kind of questions that we need to ask in order to know our minds.

As far as this book is concerned, questions about consciousness are not among them. It treats consciousness as a critical evolutionary issue in just one important respect, as the basis of theory of mind, the ability to think what another individual might be thinking. It does not attempt to explore the phenomena of consciousness, the redness of red and the wetness of water. Maybe one day these will be convincingly analysed in terms of natural selection, but for the foreseeable future they will remain matters of electricity and chemistry.

Seeing consciousness as the principal question of human mental evolution is a way of keeping faith with the soul by secular means. But a mind in isolation is nothing. If one starts from the mystery of subjective consciousness, the questions are profound, but their relation to everyday life may be tenuous. Coming to terms with an evolved mind is challenging because of the questions it poses about our relations with each other; about how our evolved psychology guides us in our approaches to sex, status and fairness. As We Know It starts with four feet on the ground, and works from there. Hominids are treated as animals in a landscape, finding food and choosing mates, rather than as creatures of destiny. They are treated also as social animals, who have got to where they are today through the evolutionary motive power generated by interacting with each other.

In dealing with such issues, we are faced with the possibility that we know less than we would like to think we know about what is going on in our minds. Freud presented this problem in terms that merely deepened the sense of mystery, describing unconscious mental depths filled with currents of primordial instinct and ancient taboos. While agreeing with Freud that this is largely about sex, modern evolutionary theory depicts a mind fit for a systems analyst rather than a priest, its architecture built from modules and algorithms. To understand its implications, we have to consider the environment to which it is adapted. First and foremost, the environment of mind is society. For that reason, this book ends up not in the depths of the self, but in modern society and its politics.

In making a way to that destination, I have chosen not to follow some of the more familiar routes through human evolution. Instead of concentrating on the artistic achievements of the Upper Palaeolithic, traditionally used to show that people like us were so much more sophisticated than their predecessors, I have dwelt on artefacts made in the Lower Palaeolithic by hominids who had no symbolic culture. Considering these objects, known as handaxes, affords an opportunity to contemplate minds which were radically different from ours, and may or may not be considered human.

When imagining another mind, it is only natural to wonder what it must be like to be that individual. We would not be human if we didn’t — according to the theory behind theory of mind. The means we have for imagining what it was like to be a Lower Palaeolithic hominid are indirect in the extreme. We have to build up a picture of how these ancestors lived using a combination of ecology and archaeology. Working from the ground up, we need to establish what we can about the landscape: its rocks, its watercourses, its vegetation, the sunlight and rain that fell upon it. These conditions shaped the forms that hominid societies took, and the possibilities can be explored by careful comparisons among other living primate species. Having created a context using general primate knowledge, we can try to make sense of handaxes, which are what remains of the hominids’ distinctive character. It may be no more than human vanity, but in will-o’-the-wisps one can imagine moments of being a hominid a million years ago.

2

We do not migrate from biology to culture like settlers moving into a land of opportunity, nor do we shuttle backwards and forwards between the two provinces. We live in both at the same time. This is a complicated state of affairs. The options are to try to understand it better, to maintain the fiction of simplicity, or to declare that the situation is too complicated to analyse.

It is not just a matter of what is intellectually taxing, though, but of what is morally tainted. Many people cannot hear ‘Darwinism’ and ‘society’ in the same breath without thinking of Social Darwinism, the ideology of fitness that gave evolution a bad name. Its most extreme manifestation was in Nazism, and a widespread suspicion remains that any move to bring Darwinism into social affairs will be a step back in that direction. Social Darwinism is easier to recognize than to define precisely, but it is generally taken to incorporate belief in racial hierarchy, concern about the number of children born to people of low social status, and the assumption that success in life reflects innate quality rather than a fortunate position in an unfairly ordered society. Some of its themes are closer to prevailing wisdom than conventional opinion would care to admit.

Social Darwinism is therefore a phrase that reliably triggers moral revulsion, and modern Darwinism disclaims the label vehemently. The philosopher Daniel Dennett is not one to sugar the Darwinian pill as far as its implications for religion are concerned, but when it comes to politics, he feels obliged to denounce Social Darwinism as ‘an odious misapplication of Darwinian thinking in defense of political doctrines that range from callous to heinous’.[1]

In his book, Darwin’s Dangerous Idea, Dennett argues that there is widespread resistance to the Darwinian idea, taking a variety of forms. He concentrates not on those who take the Book of Genesis literally — even though they constitute 48 per cent of the US population, according to a poll he mentions — but on scholars who accept that living organisms are the modified descendants of earlier forms. Dennett relates the history of Darwinism as a series of strategic retreats by its opponents, in which they accept that Darwinism can explain a certain fraction of the world’s great questions, but attempt to draw a line beyond which it cannot pass.

While Dennett focuses on individuals, the process he identifies is well illustrated by the response of the Catholic Church to the challenge of evolution. The Holy See came to terms with Darwinism quite swiftly by its standards. Pope Pius XII gave Catholics permission to accept evolutionary theory in 1950, but insisted that divine intervention had been necessary to create the human soul. In 1996, Pope John Paul II moved the Church’s position from tolerance to endorsement, when he agreed that the theory of evolution is ‘more than just a hypothesis’. While this accentuated the positive side of Catholic engagement with science, it did not alter the strategy of defining spheres of influence. Science was allowed the material domain, as long as it did not entertain pretensions to the spiritual realm. As the Pontiff made clear in later remarks, the Church considered evolution ‘secondary’ to divine action. ‘Evolution isn’t enough to explain the origins of humanity,’ he said, ‘just as biological chance alone isn’t enough to explain the birth of a baby.’[2] The policy could also be seen at work in a conference on the development of the universe, attended by the cosmologist Stephen Hawking, which the Pontifical Academy of Sciences held at the Vatican in 1981. John Paul II set the boundary at the Big Bang, advising the scientists present that they were free to go back as far as that point. Any further, and the physicists would have to hand over to the metaphysicians.[3]

Unlike the Church, with a political understanding of the need for historic compromise developed over two millennia, ordinary people tend not to be overly concerned with dogma. But you don’t have to be a Catholic to share the feeling that something about humanity must be kept sacred. For some, this will be expressed in the idea of a spirit. Others will affirm that those aspects of the human condition which science cannot describe are the ones we should hold most precious. Faced with scientific pretensions to explain fundamental aspects of culture, they might well fear that science was mounting a bid to dehumanize humanity. And once again, that raises the spectre of what happened when Social Darwinism was incorporated into political doctrine.

There is no alternative, though. To come to terms with an evolved mind, you have to learn to stop worrying and love sociobiology.

The word makes a lot of people wince, including many of the science’s practitioners. It made its public debut with the publication of Edward O. Wilson’s Sociobiology: The New Synthesis, in 1975. Thanks to the final chapter, which discussed the human species, the book had notoriety thrust upon it. Critics identified sociobiology as the science department of the New Right, an association which has persisted ever since. ‘Sociobiology is yet another attempt to put a natural scientific foundation under Adam Smith,’ wrote Steven Rose, Richard Lewontin and Leon Kamin. ‘It combines vulgar Mendelism, vulgar Darwinism, and vulgar reductionism in the service of the status quo.’[4]

For Rose, Lewontin and Kamin, Darwinian sociobiology was no more than an updated edition of Victorian Social Darwinism. The political critics of sociobiology saw it as an adjunct to the aggressive ideology of the free market, justifying selfishness, competitiveness, individualism and the survival of the fittest. Their assaults left their mark on the public face of sociobiology, encouraging a second wave of sociobiological thinkers to distance themselves from the pioneers.

These theorists call themselves evolutionary psychologists, and represent what is now by far the most influential current in sociobiological thought. Opinions vary on the difference between sociobiology and evolutionary psychology. According to Donald Symons, a notable exponent of the latter, human sociobiology is one of several labels — he himself favours the term ‘Darwinian social science’ — applied to a school of thought based on the idea that individuals seek to ‘maximize inclusive fitness’. This means that they will always tend to behave in ways which favour the reproduction of their own genes and those they share with their kin.[5] They should therefore do everything possible to maximize the number of offspring who survive long enough to reproduce in their turn, and Symons points out how remiss people are in this respect. A healthy white woman wishing to maximize her fitness in the United States today could bear a baby every year or two, he suggests, in full confidence that each would be adopted by a middle-class family. ‘A reasonably young male member of the Forbes 400 could use his fortune to construct a reproductive paradise in which women and their children could live in modest affluence and security for life (as long as paternity was verified),’ he continues. A wealthy man’s reproductivity could be increased a hundredfold in this way. Meanwhile, men would vie with each other to make deposits in sperm banks: Symons’s mischievous logic implies that they would compete more fiercely over these than they would over actual women. However, he concludes, ‘It is difficult to picture clearly a modern industrial society in which people strive to maximize inclusive fitness because such a society would have so little in common with our own.’

Evolutionary psychology is less convinced that human genes know what’s good for them these days. It sees living humans as creatures shaped by an ancestral environment long since left behind, which it calls the environment of evolutionary adaptation, or EEA. The idea was first raised in 1969 by the child psychologist John Bowlby, in the first volume of his work, Attachment and Loss. Evolutionists then took it over and rephrased it in the modern Darwinian terms of inclusive fitness, rather than the good of the group. In the first instance, the EEA was the savannah, and subsequently expanded to include most environments that the Earth’s land surface has to offer. All these environments supported modes of life that were fundamentally similar, in that they were based on gathering and hunting. Farming only started within the last ten thousand years, a footnote to the chapter of Homo that had lasted a couple of million years. Evolutionary psychology conceives the mind as a collection of adaptations made to address specific problems in the founding environment. In contemporary environments, people’s behaviour will sometimes be adaptive, and sometimes not.

There is no question that this is fundamental to evolutionary psychology’s vision. Nevertheless, evolutionary psychology can be seen as a matured version of 1970s sociobiology, with a better sense of history. Many insiders certainly seem to feel that the choice of name is a political one. In 1996, the Human Behavior and Evolution Society officially dropped the word ‘sociobiology’. One scholar, contributing to an e-mail discussion about the decision, revealed that although he used the term ‘evolutionary psychology’ to describe his university classes, he privately remained ‘a reformed, unrepentant sociobiologist’.[6]

Standard definitions of sociobiology, derived from Edward O. Wilson, refer to the study of the biological bases of social behaviour. Under those terms, evolutionary psychology is a subdiscipline of sociobiology. Despite its unattractive connotations, there are good reasons to retain the earlier term. Evolutionary psychology currently denotes quite a specific body of thought, and using the term ‘sociobiology’ leaves one’s options open. On the one hand, it acknowledges the hardline fitness maximizers, and the ideological cap that fits them. On the other, it suggests the possibility of very different inflections, whether kinder and gentler, or of a different colour altogether. The possibilities run the gamut from Social Darwinism to Darwinian socialism. It is also franker. What we’re dealing with here is not safe, not unproblematic, not easy. But it has far too much potential to be left to the sociobiologists.

3

The Darwin Seminars ran during the period in which this book was written, from 1995 to the end of 1998, and inform it throughout its course. They were a series of lectures and discussions, held at the London School of Economics, and they were a salon as well. The term has been applied to them both pejoratively and approvingly. One’s attitude to salons depends largely upon whether or not one is invited, but these things are an inevitable part of metropolitan life, and science is as entitled to a share of the glamour as arts or letters. The events had another kind of aura, too: they seemed to be bidding — quite consciously — for legendary status, like a nightclub which becomes the hotspot of a new scene; the kind of place people in years to come will pretend they were at.

Organized by Helena Cronin, a philosopher by training, they featured an extraordinary range of speakers. There was Simon Baron-Cohen, who talked about mental modules and autism; David Haig, who discerned in the trials of pregnancy conflicts of genetic interest between mother and foetus; Anders Pape Møller, who glued tail extensions on to swallows to study the effect on their sexual success (positive, at least at first); Garry Runciman, who spoke about armoured infantry in ancient Greece; Robert Trivers, who dedicated his lecture about self-deception to his former graduate student Huey Newton, once a founder of the Black Panther Party; and a lot of scientists who constructed mathematical models instead of doing experiments. The audience was similarly variegated. As well as biologists and psychologists, you might have encountered archaeologists, palaeoanthropologists, the odd novelist, and plenty of local economists.

The latter did not attend simply because they were on the premises. Like the others, they found in modern Darwinism an intellectual lingua franca that allowed people from different disciplines to participate in a common discussion. This seemed like a refreshing change for academe, where the mode of production forces disciplines to become ever more specialized and inaccessible to outsiders. At the same time, the language had a familiar accent. Modern Darwinism, dating from the mid-1960s, is based on the work of a small group of theorists who shook down evolutionary theory and gave it a new set of key themes. The thrust of their efforts was to base Darwinism on individuals, instead of groups; and then to propose mechanisms by which co-operative behaviour could arise from self-interest. Their point of departure came in 1966, with George Williams’s Adaptation and Natural Selection, which established the principle that nothing in evolution happens because it is good for the species. Williams argued that selection is unlikely to operate at the level of groups, and that the fundamental unit of selection is the gene. Groups are transient and blurred at the edges; each individual created by sexual reproduction is a unique assortment of genes; the only true constant in reproduction is the gene — the ‘selfish gene’, as Richard Dawkins later called it.

During modern Darwinism’s formative period, in the 1960s and 1970s, John Maynard Smith, Robert Axelrod, William Hamilton and Robert Trivers were prominent among those who worked out how to explain co-operation, between both related and unrelated individuals, without invoking group selection. The notion of inclusive fitness, or kin selection, recognized that individuals shared genetic interests to the degree that they were related. Evolutionary game theory shed light on how unrelated individuals might find ways to practise reciprocal assistance without being cheated. No wonder the economists felt at home in Darwinian circles. Liberal economics and biology were singing from the same hymn-sheet.

At first I was bemused and sceptical. The focus seemed to veer from the grist of molecular biology journals to the stuff of popular psychology magazines. I struggled to grasp the former, and was surprised at the readiness of serious scientists to entertain the latter. A packed hall listened to Devendra Singh, of the University of Texas, give an illustrated lecture about what men like to see in women. Singh’s contribution to the search for human universals was evolutionary psychology’s equivalent of the Golden Section, a ratio of 0.7 between the measurements of a woman’s waist and her hips.

Singh reasoned that it is in the reproductive interests of males to avoid mating with females who are already pregnant. It would therefore be adaptive for them to be attuned to cues indicating whether a female is pregnant or not. Since a woman’s waist thickens early in pregnancy, ancestral males who avoided females with unindented figures would have fathered more offspring than males who did not discriminate in this way. Furthermore, Singh argues, a waist wider than the hips indicates susceptibility to illnesses, including diabetes, heart disease and certain cancers. ‘Attractiveness, health and fecundity at a glance,’ as he put it.[7]

To test for signs that such a process of selection had left its mark on the male psyche, Singh showed men line drawings of women with different waist-hip ratios. His subjects consistently expressed a preference for a ratio of around 0.7, and he found this magic number in all sorts of other places. In Britain, he told the audience, he was always asked ‘What about Twiggy?’ He had checked the vital statistics of the 1960s model, the forerunner of the ‘waifs’ of the 1990s, and found that her waist-hip ratio was close to the ideal, at 0.73.

The logic was sound. As the eminent ethologist Robert Hinde commented, Singh had shown that a preference for relatively narrow waists would have served the reproductive interests of ancestral males. But Hinde objected that Singh had not done the experiments necessary to establish his case. I was left with other reservations. Least importantly, I noted with interest that nobody seemed to object to the titillating undertow of the presentation. Across the Atlantic, the science writer John Horgan was also struck by the same lack of reaction when Singh showed his slides at a meeting of the Human Behavior and Evolution Society. ‘The headless woman in black leather panties has got to be the last straw,’ he gasped, but protest came there none.[8]

There were, however, some doubts expressed about the evolutionary importance of the diseases Singh associated with high waist-hip ratio. These mainly occurred in middle and old age, and in the West, so it seems improbable that they would have had much impact on the number of children women bore in the ancestral environments. They might have had an indirect effect upon the survival of these children, by reducing the amount of maternal care the children enjoyed, but it seems unlikely that they would have been major causes of death among foraging people. Any information that waist-hip ratio provided about their likelihood would therefore have been of questionable reproductive advantage to males, making it less plausible that the mechanisms of selection would have engaged to inscribe a preference for the trait into the male psyche.

Before his talk, Singh had visited the Natural History Museum in South Kensington, to examine replicas of the Palaeolithic figurines known as ‘Venuses’. He found that though the figures they depicted were obese, the proportions of waist and hip were in accord with his theory. This seemed to undermine the adaptive value of sexual inclinations based on waist-hip ratio, since it implied that these preferences induced men to overlook obesity, which is unlikely ever to have been a healthy trait. More significantly, though, his discussion raised questions about the interpretation of cultures. First, the evidence is selective. The ‘Venuses’ are well known; less famous, and less readily readable through the lenses of modern culture or art in historical times, are an equally widely distributed group of female statuettes which are cylindrical rather than globular. Some of them have been found at the same sites as their globular sisters, including Willendorf, where the most celebrated ‘Venus’ was discovered. The very first prehistoric figurine to be dubbed ‘Venus’, found at Laugerie-Basse in the Dordogne region of France, has no head, a trunk with almost parallel sides, a pair of legs that give the figure only rudimentary hips, and a mark between them which is the only clear indication of the statuette’s sex. A number of the statuettes found in Russia are, literally, stick figures.[9]

Leaving these aside, why on earth should anybody assume that a statue made in the Stone Age has a meaning comparable to that of a pin-up produced under modern capitalism? Just because the dominant use of the female form in our culture is as an object of sexual desire, there is no reason to project our perspectives on to an artefact 20,000 years old. They may have represented matrilineal inheritance; though full-blown matriarchy, proposed by Victorian archaeologists, has now fallen from favour, except among the goddess tendencies in feminism. Some of them may have represented pregnancy, or conditions of plenty in which people might grow fat, or women’s power, or something else altogether. According to at least one strand of medical opinion in the earlier twentieth century, the Venus of Willendorf is an anatomical illustration of endocrine obesity, ‘an index of the sedentary, overfed life of woman in the prehistoric caves’.[10] One ingenious proposal is that the statuettes represented the artists, women depicting how their pregnant bodies looked as they stood and gazed down at themselves.[11] Another recent suggestion, from the palaeoanthropologist Randall White, is that they were magical objects whose primary purpose was to protect mothers during childbirth.[12] Meanwhile, evolutionary psychology is content to remain on the level of pin-ups.

Assumptions about existing cultures may not be warranted either. To test the possibility that his results merely reflected Western tastes, Singh gave his questionnaires to visiting Indonesian students. They may have been more familiar with Western images of women than he supposed, though, particularly since they were Christians rather than Muslims. Under the bombardment of images that would have begun before they got off the plane, they may have rapidly learned to associate a particular kind of figure with glamour and sexual allure; or they might have been inclined to give responses which they understood to be appropriate in their host culture.

This is not to deny that Singh has a hypothesis which can be investigated. But you can’t grasp the universals of human nature without recognizing cultural differences. When Singh’s drawings were shown to men from a population who have remained more isolated than most, the Matsigenka of southern Peru, higher waist-hip ratios were preferred to lower ones. Among a more Westernized Matsigenka group, men considered lower waist-hip ratios more attractive. And preferences in a third, even more Westernized group from the same region were indistinguishable from those of North Americans.[13]

Anthropologists and archaeologists are concerned above all with context. They become understandably vexed when they hear evolutionary psychologists proposing universal human truths without attempting to set their claims within the context of anthropological or archaeological knowledge. The undercurrent of antagonism is mutual. Evolutionary psychologists are advancing a new paradigm, based on the idea of an evolved human nature, against what they call the ‘Standard Social Science Model’. The term was coined by John Tooby and Leda Cosmides, and is sufficiently current to be known by its initials, as though it were a technical abbreviation rather than a rhetorical device. It denotes the view that ‘the contents of human minds are primarily (or entirely) free social constructions, and the social sciences are autonomous and disconnected from any evolutionary or psychological foundation’.[14]

Ironically, the mood of much discussion in evolutionary psychology is strongly in favour of keeping the social sciences disconnected from Darwin. ‘Standard’ social science is regarded as bathwater without a baby, a body of theory with nothing useful to offer evolutionary psychology. The implication is that adequate new social sciences can be built entirely within a Darwinian paradigm. Many evolutionary psychologists will naturally protest that this is a caricature of their position, or deny that such an attitude exists. But although one commonly encounters derogatory references to the SSSM in evolutionary psychological discussions, it is much rarer to hear calls for partnership between evolutionary thinking and established social science, or for integration between them. The assumption seems to be that there is only room for one paradigm.

A good example of how pluralism can work in practice comes from the work of Martin Daly and Margo Wilson, whose book Homicide is often, and justifiably, cited as a showpiece of evolutionary psychology.[15] The Darwinian structure of their thought is illustrated most clearly by their work on the killing of children by family members. They chose killing, not because of its drama but because of the relative reliability of statistics on it. Kindnesses within households are harder to measure than abuses, and the harm least likely to go unreported is homicide. Daly and Wilson reasoned that since step-parents did not share a lineage of genes with their step-children, they would be more likely to harm them than would biological parents. They found their hypothesis spectacularly upheld. Their findings, which draw upon figures from Canada, the United States, England and Wales, show a consistent pattern. For babies and very young children, the risk of being killed by a step-parent is between fifty and a hundred times higher than that of death at the hands of a genetic parent. They have concluded that being a step-child is ‘the single most important risk factor for severe child maltreatment yet discovered.’[16]

One possible objection to their analysis is that this reflects upon the kind of person who becomes a step-parent, rather than upon the operation of mechanisms favouring inclusive fitness. But when violent step-parents live with both step-children and their genetic children, they tend to spare their own kin. In any case, the finding stands as an empirical indicator of risk, which can be taken into account when social services are considering a child’s welfare. If a child is at increased risk through living with a step-parent, it is important to know this, regardless of the underlying reasons for their vulnerability.

This point also addresses another criticism, often levelled at evolutionary psychologists: that they are merely stating the obvious. Suspicions of this kind had nagged at me through the Darwin Seminars until I heard David Haig make sense of phenomena in pregnancy — high blood pressure, diabetes, massive washes of hormones — that are physiological, marked, and hard to explain other than as struggles over resources between foetus and mother, arising from conflicts of genetic interest. It ran completely against intuitions based on conventional assumptions about the intimate bond between a mother and the fruit of her womb, yet I found it immediately convincing. Others did not. In a letter to the New York Times, Abby Lippman called it an ‘outrageous’ suggestion which ‘betrays the astonishing assumption of patriarchal societies that fetuses are separate entities that happen to grow inside women’s bodies’. Lippman, a professor of epidemiology, insisted that the uterus is not ‘the site of a protracted battle between competing genomes, but the nourishing, life-sustaining part of a woman’s body, where mother and wanted fetus cooperatively coexist.’[17] So anxious that it felt the need to assert that every foetus is a wanted foetus, her rhetoric overwhelmed its own efforts to mount what could have been a salutary critique of scientific metaphor, and failed to provide alternative explanations for the phenomena Haig had adduced as evidence.

Daly and Wilson’s response to the charge of truism is that if it was so obvious, why did the social sciences fail to notice it? Daly and Wilson sound like Darwinian partisans when they talk about the failure of standard social science to realize that folklore and folk wisdom were right on this score. When they began their investigations in the 1970s, they tartly remarked in their essay The Truth about Cinderella, ‘most of those who had written on step-family conflicts apparently believed that the problems are primarily created by obstreperous adolescents rejecting their custodial parents’ new mates’.[18] Nobody had asked whether having a step-parent increased a child’s risk of being injured. ‘It’s got to be more than merely an oversight, it’s got to be motivated neglect,’ Daly declared in an interview.[19]

Some of their other remarks might also have caused uneasy twitching in liberal circles. They had observed that US inner cities show an anomalous pattern of homicide rates among couples, compared to the suburbs or cities in their native Canada. Typically, men kill their female partners several times more often than women kill their men. In this dimension of US inner city death, however, sexual equality had been achieved. Daly and Wilson first thought that the prevalence of guns might be the reason, but they found that women tended not to use firearms to kill their partners, and killings by other means show the same sexual balance. The pattern was not the same across all ethnic groups: Latina women almost never killed their husbands.

Daly and Wilson did not, however, explain this difference in terms of racial character. They saw it as the product of social relations. Latino communities are, in anthropological jargon, patrilineal and patrilocal. This means that material resources are transmitted through the male line, and men stay within their communities instead of leaving to join others when they enter marriage or similar relationships. Latina women are thus surrounded by their partners’ relatives and isolated from their own. Among urban African-Americans, by contrast, the central resource of housing is transmitted down the female line, as women get entitlements to leases via their mothers or sisters. Black women gain strength from being in networks of kin, whereas black men lack equivalent support. There is also a higher turnover of partners among African-Americans than among the Catholic Latinos, and so more step-children. Some of the domestic homicides may have occurred when women attempted to defend their children from attack by step-fathers, Wilson speculated, observing that there were no shelters available to these women, nor did they have effective access to help from the police. Killing their partners could be seen as a form of ‘self-help’.

Daly and Wilson started out studying the behaviour of rodents and monkeys, and still maintain a research interest in the rodents. ‘Perhaps we’re pre-adapted to treat Homo sapiens as just another critter,’ Daly observed. That’s the kind of talk that makes anthropologists shudder — particularly when the Homo sapiens in question are black humans at the lower end of the social scale, being discussed by white ones comfortably lodged in the upper reaches. Anthropology and sociology are only too painfully aware of the oppressive potential inherent in such analyses. Frederick Goodwin had to resign his post as chief of the US Alcohol, Drug Abuse and Mental Health Administration in 1992, after commenting that it might not just be ‘a careless use of words’ to refer to inner cities, which had lost some ‘civilizing’ elements, as ‘jungles’.[20] For those who take the sociologist Durkheim rather than Darwin as their intellectual patriarch, the savannah may seem just as dubious as the jungle.

Given the state of theoretical play in the social sciences at the moment, it is easier to talk about representations than solutions. Talking to Martin Daly and Margo Wilson, I became confident that they were instinctive liberals, in disposition as well as ideology — and in contrast to the brittle liberal veneer that some other sociobiologists feel obliged to adopt. It wasn’t just that they referred to the fact that Canada has nationalized healthcare, which mitigates inequality and leaves fewer of the poor in a state of desperation, as a factor relevant to the pattern of domestic homicide. It was that they seemed to be genuinely pluralistic thinkers, who wanted to get Darwinism working productively together with other schools of knowledge, rather than trying to replace the social sciences with biology. People frequently imagine Daly and Wilson are proposing that the murder of step-children is an adaptive act, in which individuals dispose of rivals for their own children, actual or potential. Such things happen among langur monkeys and lions, but although Daly and Wilson recognize that humans are also animals, they do not make the mistake of thinking that we are the same as particular animals. Nor do they draw a conservative moral from their statistics, to argue that step-families are a pathological departure from monogamy. They point out that step-families were common in the last century, and probably throughout human history, because people remarried after the early death of spouses. Ancestral environments, they believe, may have encouraged a readiness to take on step-families. Being a parent is extremely difficult: when the child is one’s own, evolved mechanisms underpin the commitment that is necessary. Without the support that comes with kinship, it is much more likely that an adult will fail to meet the commitment. The homicide statistics reflect the most extreme instances of this failure.

Another way of putting it is to say that step-parents are less likely to live up to their roles. Daly and Wilson argue that this is an insidious metaphor which encourages us to think that life is theatre. They also consider it to have unwarranted intellectual pretensions. ‘There is no theory,’ said Martin Daly. ‘It seems to be nothing but a metaphor.’ Yet they both acknowledge that talk of roles has produced the notion of ‘scripts’, which they accept can be a useful tool for making sense of how families work. It seems to be the utility of the idea that counts, not where it came from. Margo Wilson summed up their discussion of spouse-killing in US cities by pointing out three principal factors: matrilineality, step-families and poverty. The first is a concept derived from anthropology and primatology; the second comes from their reading of evolutionary theory; the third is a sociological and political perspective. They make sense together, and they lead to an analysis which the individual currents could not reach on their own.

‘We’ve got to synthesize evolutionary understandings and cultural understandings, not pit them against each other,’ said Daly. Daly and Wilson’s theory, which has led to studies in women’s refuges and new tools for social work, illustrates what such syntheses can achieve in practice. You can start from rodents and still end up on the side of the angels after all.

4

My fascination with prehistoric humankind was kindled a few years ago in a visit to a small group of caves at Cougnac, in the French department of Lot. The drawings on the cave walls were thought to have been made around 18,000 or 16,000 years ago, the better part of 10,000 years before people settled down to farm the land. It seemed an extraordinary span, though in terms of human origins it rapidly comes to seem like the day before yesterday (as do the revised dates, ranging as high as 25,000 years, which have subsequently been obtained from tests performed on the pigments).[21]

In one of the chambers I was able to spend a few moments on my own, standing an arm’s length away from an image of an ibex, a relief created from ochre and the natural contours of the rock. In that arm’s length was the dizzying thrill of simultaneous presence and unimaginable distance. To stand on the brink of such an image, on the verge of communication with a mind more than 500 generations away, was as enthralling as the sight of the stars in the heavens.

I had put myself in the artist’s place, standing where the artist must have stood. I could imagine reaching out, in the light of a guttering oil lamp, and making a line on the rock. Within this narrow frame of vision, I knew roughly how it had looked to the artist. Beyond that, it seemed, all else was a shimmering curtain of maybes. Maybe the artist was a man, maybe a woman; maybe the ibex was part of a ritual, maybe an image of beauty, maybe a record of an event, maybe something beyond modern imagining.

To put oneself in another’s place is a fundamental operation of consciousness, requiring theory of mind. Primatologists debate whether apes have it; developmental psychologists wonder when children acquire it. This book explores theories of mind in a different sense; scientific theories about ancient minds. The impulse behind it, though, is the fascination of the possibility that we can achieve theory of mind across geological time; that we can put ourselves, to however limited a degree, in the place of our ancestors.

At Cougnac, new to prehistory, I thought that all one could do was make up stories. Since then, I have learned that although certainty about human origins is an endlessly receding horizon, there are devices we can use to take us closer to that horizon.

5

A story need not be just a story. A theory need not be just a theory. Formally, evolution may be a theory, but it has consistently passed the tests set it, and to all intents and purposes it is the plain truth. The palaeontologist Stephen Jay Gould is clear on this point, but he is profoundly sceptical about the application of evolutionary theory to human behaviour. He has endorsed the project in principle: ‘Humans are animals and the mind evolved; therefore, all curious people must support the quest for an evolutionary psychology’ — but not, it appears from the rest of his article, if the quest is being pursued by evolutionary psychologists.[22] Following his cue, many among the lay public have learned to presume that evolutionary stories about people are ‘just-so stories’, a dismissive phrase he has popularized. Sometimes, when scientists are in a self-deprecating mood, they also refer to ‘the stories we tell’, as if they were just stories.

Gould has also drawn his readers’ attention to the importance of checking original texts, instead of relying upon secondary accounts of them. Rudyard Kipling’s original Just-So Stories for Little Children appeared at the turn of the century, at the zenith of the British Empire and the nadir of Darwinism, when many scientists had lost interest in Darwin’s idea. Since then there has been a reversal of fortune, dating in fact from around that time, when scientists rediscovered Mendel’s work on the mechanism of genetic inheritance. Darwin is now held in awe, if not in universal affection, while Kipling is widely regarded as a deplorable old imperialist, although his poem ‘If’ remains a favourite with the British public. Not all his lines remain pleasing to the modern ear, though. ‘The Ethiopian was really a negro, and so his name was Sambo,’ he jocularly confides to the little children in ‘How The Leopard Got His Spots’, having previously used a worse word than ‘negro’.[23]

Many people would see remarks like these as pollution, which foul the rest of the text like spit in a glass of milk. The only safe and proper reaction is to throw the lot down the drain. As the century has begun to wind itself down, though, a new perspective has arisen in certain quarters. Reconciliation and mutual understanding have come back into favour. In that spirit, perhaps it might be instructive to apply a different model to unsatisfactory writings of the past. Instead of seeing a text as a fluid, in which any toxic trace contaminates the whole, it could be considered as an assembly of components. Software is the obvious analogy, which implies that it can be debugged. If the flaws are really extensive, then one constructive option might be to strip out the serviceable elements and incorporate them into a new architecture. Maybe the Just-So Stories could be turned into something more than just stories.

Not all of them are suitable for modernization. Some are to be taken as one finds them, since one either accepts the morals they are designed to illustrate, or one doesn’t. The rhinoceros stole the Parsee’s cake, so the Parsee rubbed cake crumbs into the rhino’s hide, and that is how the rhinoceros got his rough and lumpy skin. The camel said ‘Humph!’ every time he was urged to work for men, as is the duty of all animals. It seems a rather restrained response, especially for a camel, but a djinn punished him by giving him the hump.

‘How The Leopard Got His Spots’ is a somewhat different case, being more concerned with telling a story than pushing a moral, and it contains a grain of truth in the wrapping of a fable. It begins in the High Veldt of South Africa, a desert habitat dotted with tufts of grass. Like all the other features of the environment, the grass is a uniform ‘yellowish-greyish-brownish colour’, and so are the animals. The herbivores include giraffe, zebra, eland, kudu, bushbuck, hartebeest and the now-extinct quagga. Their predators are leopard and human, the text being taken from Jeremiah chapter 13, verse 23: ‘Can the Ethiopian change his skin, or the leopard his spots?’

In those days, animals lived a very long time, and eventually the prey learned to avoid the predators. Some of the herbivores migrated to a forested environment, in which the foliage produced an uneven illumination pattern of ‘stripy, speckly, patchy, blatchy shadows’. To avoid standing out in these conditions, the animals developed camouflage patterns; the blotches of the giraffe, the zebra’s stripes, delicate lines like bark on the eland and kudu. ‘Can you tell me the present habitat of the aboriginal Fauna?’ the Ethiopian asked Baviaan the Baboon, archly acknowledging the natural sciences. Baviaan, a regional oracle, replied that the aboriginal Fauna had joined the aboriginal Flora. Eventually, the predators worked out from Baviaan’s Delphic remarks that they needed to move into the forest, and adopt similar cryptic colouring.

Kipling invokes two agencies by which external appearance can be changed. One is Lamarckian, whereby characteristics acquired during an organism’s lifetime are transmitted to future generations. The other is magic, whereby an organism can change its skin at will. Natural selection it isn’t, but adaptation it is.

If we are to be positive here, and try to build on this shaky point of contact with science, we must first drop Lamarck and magic. In the process, we can modify the awkward device of longevity employed by Kipling. Instead of individual animals living a very long time, we need a breeding population to persist long enough for new traits to arise, through the effect of selective pressures acting upon variations that appear within the population. Herbivores that are blessed by genetic chance with blotches or stripes will be less vulnerable to predators; they will thus leave more offspring than their unpatterned companions; over the generations, the traits will be developed and fixed. Similar pressures produce spotted leopards.
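The arithmetic behind that last step can be made concrete. What follows is only a minimal sketch, not anything drawn from Kipling or from the literature: it assumes a single heritable patterned trait, a fixed survival penalty `s` for unpatterned animals, and no chance effects. The function names and every number are hypothetical, chosen purely for illustration.

```python
def next_frequency(p, s):
    """Return the patterned share of the population after one generation.

    p: current share of patterned animals (between 0 and 1)
    s: hypothetical survival penalty paid by unpatterned animals (between 0 and 1)
    """
    # Patterned animals have relative fitness 1; unpatterned ones 1 - s.
    mean_fitness = p * 1.0 + (1.0 - p) * (1.0 - s)
    return p / mean_fitness


def generations_to_reach(p0, s, target):
    """Count the generations needed for the patterned share to reach `target`."""
    if not (0.0 < p0 < 1.0 and 0.0 < s < 1.0 and p0 < target < 1.0):
        raise ValueError("need 0 < p0 < target < 1 and 0 < s < 1")
    p, generations = p0, 0
    while p < target:
        p = next_frequency(p, s)
        generations += 1
    return generations


if __name__ == "__main__":
    # Illustrative values only: 1% patterned at the start, 10% penalty.
    print(generations_to_reach(0.01, 0.1, 0.99))
```

Under these assumptions the patterned share can only rise, and a heavier penalty for the unpatterned shortens the wait. Kipling’s long-lived individuals are no longer needed; a persistent breeding population does the work.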

Next, the ecology needs to be examined. A gaping hole appears immediately. If the herbivores were happy in the desert, presumably subsisting on a diet of yellowish grass, how did they adapt to the very different conditions of the forest? Evolutionists sometimes refer to pre-adaptation, in which a trait that has evolved for one function turns out to be useful for something else when circumstances change. Stretching a point, you could argue that the giraffe’s neck was pre-adapted to the forest habitat, but that begs the question of what the beast was doing on the treeless veldt in the first place. Even if the other species also proved adaptable to wooded conditions, the radical change of environment would surely have a big impact on their way of life. Some of the most important insights of behavioural ecology concern the effect on social relationships of how resources are distributed; whether dotted in patches across the landscape, as in the desert, or evenly spread, as in a lush forest.

At the same time, reality checks are needed. Depending on what sources are available, these may include reference to the fossil record, and to whatever information is available about environments in bygone times. They will also include comparisons with what is known about the behaviour and distribution of living members of the species in question.

Finally, it’s desirable to run an audit for each adaptation, weighing the benefits to the individual against the costs. Hanging in the balance are the costs of finding food in each environment, the likelihood of falling victim to a predator, the amount of competition for resources in the different environments, and the relative concentration of parasites. In many respects the forest may be more congenial. There will be more food, in greater density. The climate may be less extreme, making it easier for an animal to maintain an adequate intake of water. There will be less need to defend against carnivores by gathering together in large groups, one of the main tactics employed in open country. This may reduce conflicts between members of the same species. On the debit side, forests tend to contain more creatures from a wider range of species than other habitats. Many of these are parasites of various unpleasant sorts, from ticks to viruses. And although food may be more plentiful, there will be more competitors for it, employing a wider range of tactics. For the animals in Kipling’s scenario, it would be like moving from a small village to a big city.

Children a century on from Kipling do not just read stories. They play simulation games, using computers, and that is what scientists do to examine evolutionary or ecological issues. It would be a relatively simple matter to assign some values to the different factors in the Kipling scenario, and use them to animate a little world on a computer screen in which animal icons move from veldt to forest. With the microprocessors calculating the costs and benefits, the rates of change under various conditions could be observed. If the system reached an equilibrium containing stable populations of all the species, then Kipling’s ending, in which everybody lived happily ever after, could be said to be plausible.
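A toy version of such a simulation fits in a few lines. The sketch below is an assumption-laden illustration rather than a real ecological model: it tracks only the share of one herbivore population living in the forest, it collapses the audit into a handful of invented payoff numbers (food, parasites, predation, and a crowding cost that rises with local density), and it lets animals drift towards whichever habitat currently pays better. All parameter names and values are hypothetical.

```python
def forest_share_over_time(start_share, steps, rate=0.1,
                           forest_food=3.0, forest_parasites=1.0,
                           veldt_food=1.5, veldt_predation=0.5,
                           crowding=2.0):
    """Return the share of the population in the forest at each time step.

    Every value is an invented illustration, not a measured quantity.
    """
    share = start_share
    history = [share]
    for _ in range(steps):
        # Net payoff in each habitat: benefits minus costs, with a
        # crowding cost that grows as more animals use that habitat.
        forest_payoff = forest_food - forest_parasites - crowding * share
        veldt_payoff = veldt_food - veldt_predation - crowding * (1.0 - share)
        # Animals shift towards the habitat with the higher net payoff.
        share += rate * share * (1.0 - share) * (forest_payoff - veldt_payoff)
        history.append(share)
    return history


if __name__ == "__main__":
    history = forest_share_over_time(start_share=0.1, steps=2000)
    print(round(history[-1], 3))
```

With these particular numbers the population stops moving once the two payoffs are equal, which happens when three-quarters of the animals are in the forest. Different invented values give a different balance point, or none at all, which is exactly what makes the exercise a test rather than a tale.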

It would also be possible to specify the range of conditions under which the Kipling scenario could work. Altering the simulated climate by changing the temperature settings, for example, would have an effect on the density of the vegetation. At some point the forest would cease to be able to support the herbivores. This level could then be compared with palaeoclimatological data, to give an idea of when the leopard might have got his spots, as well as how.

By this point, the tale of how the leopard got his spots would have become more than just a story; it would now be a working model. A model is more accountable than a story. It is governed not by a despotic narrator, but by the rule of law. The elements within it are not controlled directly by the narrator, but act according to the rules of the game. If a storyteller chooses to disregard a character, that character ceases to play any part in the story; whereas the elements of a model carry on interacting whether the model-builder is paying attention to them or not. A model can be set rules which are shared by other bodies of knowledge, allowing it to be connected to them and rendering it transparent to external scrutiny. Stories can be told; models can be tested. That’s science; and that’s the tale of how one story gets to be better than another.

Some people might object that processing texts in this way takes the poetry out of them. The loss might not be too great in the case of Kipling, who ‘wrote poetry like a drill sergeant’, according to W. H. Auden. It is true that the human touches are discarded as the narrative is stripped down to its structural elements, and that science aspires to be impersonal — officially, anyway. But poetic structures are not the only ones capable of containing the ions and fluxes of charged imagination. Although the sketch for the Leopard’s Spots model employs pretty conventional ideas about camouflage, one of the species in the model has inspired scientists to bolder flights of reason. Nearly all zebras live in substantial herds on arid plains, where vegetation is sparse. There is nothing for their stripes to blend in with, except the rest of the herd. One school of thought holds that the effect of the stripes is to make it hard for an attacker to distinguish one zebra from another, and therefore to choose an individual as a target. An alternative interpretation comes from Amotz and Avishag Zahavi, a particularly bold pair of evolutionary thinkers. ‘If the stripes were meant purely for camouflage, they could have been random, like a leopard’s spots,’ they argue. The stripes act not to draw attention away from an individual, but to accentuate parts of that individual, including lips, hooves, legs, neck and rump. These markings may send messages about the animal’s condition to a variety of receivers, both friendly and hostile. ‘A predator looking for easy prey, a rival evaluating his chances in a contest, a female looking for the best father for her offspring, all can benefit from evaluating the muscles of the rump.’[24]

The Zahavis’ remarks about the zebra occur in a spectacular account of signal evolution, of which much more later. For now, it’s an opportunity to note that their ‘Handicap Principle’ is a clear example of how a story can be turned into a model. When Amotz Zahavi first published his ideas, he was more successful in piquing interest than winning acceptance, which did not come until other theorists published mathematical demonstrations that the Handicap Principle might work.

The critical stage in building a model is not applying the algebra. An idea does not have to be translated into numbers, though if that can be done, so much the better. What really matters in assessing a proposition about evolution is that both the benefits and the costs are taken into account. A story that weighs this balance will have a tautness missing from one which simply suggests an adaptation that it might be nice for an organism to have. A just-so story is like the M25, London’s orbital motorway. On a true motorway, lane procedures are the keys which keep the system taut and sprung. Each driver is supposed to drive along in the lane nearest the verge, moving into the next lane to overtake, and then into the lane nearest the central reservation if necessary. When the traffic is not too heavy, the aggregate effect of drivers following this simple procedure is to maximize the efficiency of the system, allowing most vehicles to travel as near to the speed limit as they want to go. It gives motorway driving a shape, and it gives the driver a sense of purpose. As the weight of traffic increases, its effectiveness diminishes and the logic of motorway driving is undermined. On the M25, congestion is so bad that the collapse of the system has been institutionalized. Drivers are instructed to stay in the same lane, removing the raison d’etre of a motorway. The M25 is just columns of traffic running next to each other. A road in which the vehicles do not really interact is like a just-so story whose agents do not really interact.

Another way in which many just-so stories resemble the M25 is that they are circular. A good evolutionary story has the sprung tension of a clear highway, and it goes somewhere.

The Prime Maxim of evolutionary thinking:

Always consider the costs.

No, it is not coincidental that cost-benefit Darwinism is flourishing in a period during which fiscal rectitude has been sanctified as the supreme public virtue, by governments with a deep aversion to levying taxes. This new moral order is affirmed everywhere, from the office manager’s memos to the dictates of the World Bank. In the public sphere, it affirms the individual and devalues the collective, just as neo-Darwinism affirms that selection acts on individuals, but not on groups.

There are still pockets of resistance. Quoted in John Brockman’s collection of interviews with scientists, The Third Culture: Beyond the Scientific Revolution, Lynn Margulis rejects not just the modern Darwinism of the last thirty years, but also the older ‘neo-Darwinism’ which began to emerge in the 1920s. According to accepted wisdom, the link that Darwin missed was the connection between his theory and Mendel’s model of genetic inheritance. One of the great missed opportunities in the history of ideas is the copy of Mendel’s paper still preserved in Darwin’s library, its pages uncut. Neo-Darwinism made good that omission. According to Margulis, though, the two theories are incompatible. She compares neo-Darwinism to phrenology, and predicts that it ‘will look ridiculous in retrospect, because it is ridiculous’.[25] She recounts how she asked the biologist Richard Lewontin why ‘he was so wedded to presenting a cost-benefit explanation derived from phony human social-economic “theory”’.

Margulis’s own story is, in the apt expression Daniel Dennett uses in the comment which follows her interview, delicious. She had a brilliant idea which anybody could grasp, but which the scientific establishment laughed at. Now her peers have accepted that she was right. Up to a point, that is. She is still living in a paradigm of her own. Her grand theme is that change in living things happens by merger rather than by mutation. One of the most profound transformations in the evolution of life was the appearance of cells with nuclei. This may have occurred, she argues, through a predatory invasion of one bacterium by another. Assault turned to alliance, as the two forms integrated themselves together. Nucleated cells also have structures called mitochondria, which serve as a source of energy. Though they function as sub-cellular organs, or ‘organelles’, mitochondria have their own DNA and look like cells within cells; which is exactly what Margulis says they are.

On mitochondria and nuclei, Margulis’s ideas are now accepted wisdom. Her peers remain unpersuaded that such processes are the dominant mode of evolutionary change, or that mutation cannot provide enough material upon which selection can act. In their quoted comments, Daniel Dennett and George Williams both make counter-charges of ideological bias. Dennett expresses regret that, in his opinion, Margulis is trying to politicize the idea of symbiosis, as a tool to promote co-operation instead of competition. Williams points to her support for James Lovelock’s Gaia hypothesis, which proposes that life on Earth behaves as a united entity to maintain the environmental conditions that support it. He suggests that ‘she wants to look out there at nature and see something benign and benevolent ... Whereas I look out there with Tennyson and see things red in tooth and claw.’

‘Time will tell,’ Williams concludes, ‘and will show that my approach is more fruitful in generating predictions about discoveries we’re going to make.’ In a scientific exchange of views, that is the last word to have. Predictive power is the measure of a theory or a research programme. It is a way of saying that an idea can be put to good use.

This sense of practicality is one of modern Darwinism’s most attractive features. Stephen Jay Gould calls adaptationism ‘the British hang-up’, and sees Britain as a hotbed of ‘Darwinian fundamentalism’. British theorists tend to operate on the presumption that any trait of an organism is an adaptation produced by evolutionary selection. On the continent of Europe, theoretical biology has traditionally paid more attention to the structure of organisms. Somebody like Brian Goodwin, the Open University biologist who argues that the influence of structural logic on living forms has been massively underrated, is a continental thinker who finds himself on an uncongenial island. Gould’s sympathies are with the continentals.[26]

Maybe the heterodox evolutionists are in possession of some vital truths. Perhaps the broad vision of Lynn Margulis will eventually be accepted, as well as the nuclei and mitochondria. Possibly the evolutionary theorists David Sloan Wilson and Elliott Sober are right to argue that group selection really does happen after all; or possibly John Maynard Smith is correct to say that the differences between them and orthodoxy are only semantic.[27] But one distinguishing characteristic of orthodox, reductionist neo-Darwinism is its productivity.

It even has something to say about Gaia. According to James Lovelock and his colleagues, algae in the sea produce clouds. Directed by the Gaia hypothesis to look for a compound which might transport sulphur, an element essential to life, scientists have discovered that marine algae emit a gas called dimethyl sulphide. Reaching the air, this could react with oxygen to form tiny solid sulphate particles. Water vapour could condense around these seeds to form clouds, which would reflect sunlight away from the surface of the planet, and thereby help to cool it. Warmer temperatures would cause the algae to flourish, producing more dimethyl sulphide, which would stimulate cloud formation, and lower the temperature again. Algae thus act as a living thermostat; cooling the Earth by as much as four degrees, according to one estimate.[28]

The difficulty for conventional evolutionary theory is that it’s easy to see how algal dimethyl sulphide production might be good for the planet, but less easy to see what is in it for the algae. William Hamilton, one of modern Darwinism’s most influential theorists, became intrigued by the problem. A chemical precursor of dimethyl sulphide had a possible use as an antifreeze — but that would not explain why it is produced by algae living in warm seas. Hamilton speculated that the cells might need protection if they were to be transported high into the air. If algal cells could get airborne, they could take advantage of the winds to disperse, like pollen. Together with Tim Lenton, an atmospheric chemist, Hamilton worked out a hypothesis in which the crucial role of dimethyl sulphide is to create upward air currents. This it does by producing the sulphate particles; as water vapour condenses around them, heat is released, warming the air, which duly rises. Algal cells already airborne are carried with it, and the breezes that the air currents create at sea level help draw algae from the surface of the sea into the air. Many algal blooms are known to be clonal, each cell containing the same genes, so the genetic interests of a cell up in the clouds might well be identical to those of one in the waves below. The model thus illustrated the possibility that what might be good for one algal cell might be good for many others, and for the planet as well. Gaia and modern Darwinism might be reconciled — though it’s not exactly what Lynn Margulis had in mind when she spoke of mergers and symbiosis.

In their very different ways, the cases of the algae and the stepchildren powerfully suggest that modern Darwinism can be integrated with other ways of understanding the world. They also show that the formal requirements of evolutionary theory can be what imagination needs to support its flights, rather than constraints which keep it earthbound. Nor is it necessary to accept the rules as final truth. You can accept that reductionism has its limits, while sticking faithfully to reductionist principles when working with scientific ideas. Reductionism gets results — many more, so far, than rival methods — and these results are woven together by the common understandings created by the rules of the programme.

Humans are always close to their biology. They are of course inseparable from it, always animals as well as people, always living through both their nature and their nurture. These pairs are not opposites, just different aspects of the same thing. Perhaps, at the turn of the new century, this may be a good time to turn over a new leaf; to go beyond the opposition between soul and flesh that is so fundamental to Christian tradition. Descartes’s dualism of mind and body usually appears in histories of science as a superseded concept: perhaps now is the time to blow away its lingering spirit. When both aspects seem important in addressing a question about human affairs, it should be possible to take them as a whole. It will not always be a harmonious whole, but its tensions should be creative.

Sex is the natural theatre in which to work some of these tensions through. As well as being a matter of public interest, it is what makes the world of evolutionary psychology go round. The idea that the sexes have different reproductive interests is profoundly simple, like Darwin’s original idea of natural selection. Unlike Darwin’s world-transforming idea, differences of reproductive interest have been recognized from time immemorial. Modern Darwinism tapped the potential of the insight by placing it in a scientific matrix. Its ability to sit happily within both folk and scientific wisdom has since given evolutionary arguments about sex ready access to the media at large. Inevitably, one of the results has been a spate of answers to questions such as ‘why men don’t iron’ (the title of a British television series on evolutionary psychology) which assure the public that science is now back in tune with folk wisdom about human nature.

Folk wisdom is based on generalization, not variation. If most men don’t iron, folk wisdom decrees that men don’t iron, period. A man who does iron is therefore anomalous at best, and at worst is not considered to be a proper man at all. Instead of embracing a spectrum of behaviours within the normal range, accounts in the folk idiom depict a stereotypical norm and pathological deviations from it. Why Men Don’t Iron presented a woman who enjoyed building model railways — and had an abnormally masculine hormonal constitution. Although the dominant cultures in North America and a number of European countries take a relaxed view of men who iron, the normative influence of folk wisdom persists even in these regions. Stridently conservative accounts like Why Men Don’t Iron, relentlessly declaring that efforts to challenge gender roles have simply highlighted the irreducible differences between male and female minds, are accepted with little demur.

Evolutionary psychology is capable of a broader vision. Understanding variation as it does, it can appreciate that behaviour will cover a spectrum. Some men will do all their own ironing, some will do none, but all of them fall within the normal range. In its more elaborate forms, based on game theory and John Maynard Smith’s concept of the evolutionarily stable strategy, evolutionary psychology may take the view that it is a good idea for males to be able to pursue different strategies. If all of them are competing to be Iron Men, hard as nails, then the hardest will take all and the rest will be disappointed in reproductive success. It would make sense for some to compete as Ironing Men, offering resources other than tough genes and protection similar to the kind given by the Mafia.

Having set itself the task of describing a universal human nature, though, evolutionary psychology gravitates towards the norm. Peering at the tumult of human behaviour, its eyes light upon what makes evolutionary sense. That leaves a vast amount, much of it what many people would probably consider most interesting. An easy way to make an inventory covering a significant proportion of these all too human foibles would be to consult a few contemporary art catalogues, or similar postmodernist texts. These speak of gender rather than sex, homosexuality rather than heterosexuality, polymorphous perversity rather than reproductive success; of cyborgs made of flesh and silicon, rather than humans adapted by natural selection; of what women could be, rather than what evolutionary psychology says they are inclined to be.

In its own way, through its contortions, obfuscations and prevarications, postmodernism is trying to keep the flame of possibility alive. That is why it is confined to the world of academic discourse, while the world is governed by ideologies of limits. Among the wealthy democracies, where the proportion of voters who pay taxes is high, public spending is strapped by the ability of political parties to offer lower taxes than their rivals. The leadership of Britain’s Labour Party has this insight carved on its collective heart. One of the intellectuals closest to it, Geoff Mulgan, has long had an interest in evolutionary theory. ‘The ideas contained within liberalism, the Enlightenment and Marxism that humans can make themselves what they want to be — an argument that reaches its apotheosis in Foucault with the image of humans as pure self-creation — is extremely dangerous,’ he told Kenan Malik in the New Statesman, six months before Labour won the 1997 general election and he was appointed to the Downing Street Policy Unit. ‘... Just as ecological understanding has shown there are all sorts of external limits to what humans can do, so evolutionary psychology shows parallel internal limits, which we transgress at a high cost.’ Achieving androgyny would be expensive, for example, since it would be difficult to make men less promiscuous or territorial.[29]

At the time he made these remarks, Mulgan was director of the Demos think-tank. Demos devoted an issue of its journal to ‘the world view from evolutionary psychology’, demonstrating among other things that the popular media do not have a monopoly on glibness. The preamble to one article announced that according to evolutionists, politicians ‘are simply striving to increase their sexual capital’.[30] It took its cue from the text, in which the evolutionary psychologist Geoffrey Miller asserted that ‘People respond to policy ideas first as big-brained, idea infested, hypersexual primates, and only secondly as concerned citizens in a modern polity’. An evosceptic insists that what is uniquely human comes first; an advocate of evolutionary psychology says we are primates first and human second. And yet it’s not a race, or a zero-sum game in which the social sciences’ loss is Darwinism’s gain.

In its eagerness to do its job and stop the reader turning the page, the preamble to Miller’s piece blurted out what some evolutionary theorists seem to feel but won’t quite say; that in a fundamental sense human affairs really are simple, and that sociobiological explanations for them will suffice. Coupled with a disdain for the Standard Social Sciences Model, this adds up to another claim of priority. Resolving this contest will take more than homilies about the virtues of co-operation and dialogue, since the differences are profound and the debates have a long way to run.

One way forward might involve thinking less categorically about questions and answers. It should be possible to agree that science could form part of a perspective which has more than two dimensions.

6

If there is one thing the study of human evolution has in overwhelming abundance, it is uncertainty. It buzzes with agitation; it is forced, whether it likes it or not, into constantly revolutionizing itself. The exhilarating thing about human origins is that they change so fast.

During the period over which this book was written, at least half a dozen landmark discoveries have been announced, though some may not stand the test of time. From Ethiopia came the news of stone tools two and a half million years old, archaeology’s oldest specimens to date. From a German coal-mine, archaeologists unearthed three spears, calculated to be 400,000 years old. No complete spears of such age had been found before, and their tapered design was a striking demonstration of the intelligence that hominids were then capable of applying to their tools. From Slovenia came a perforated bone, with an estimated age range of 43,000 to 82,000 years, that its discoverers argued might have been a flute. If it really was a flute, not just a bone bitten by an animal, then Neanderthals must have been musicians.[31] Last and far from least, an australopithecine skeleton was discovered at Sterkfontein, in South Africa. It was older and more nearly complete than ‘Lucy’, the famous specimen found in Ethiopia in 1973.[32]

Many of the islands of the Indonesian archipelago have at some periods been part of a continuous south-east Asian land mass. Others, like the Wallacean islands east of Bali, have been separated from the mainland by deep water throughout the period of hominid existence. In 1998, the journal Nature published a paper giving dates of 900,000 years for a collection of stone tools found on one of the Wallacean islands, Flores.[33] Up to this point, the oldest evidence that hominids had crossed long stretches of water was the settlement of Australia, estimated to have taken place between 40,000 and 60,000 years ago. If accurate, the dates imply that the south-east Asian hominids had far more sophisticated technological and planning abilities than their simple stone tools suggest.