Massimo Pigliucci

The Nature of Philosophy

How Philosophy Makes Progress and Why It Matters

Introduction — Read This First...

(Some) Scientists against philosophers

Overcoming Philosophy’s PR problem: The next generation

Western vs Eastern(s) philosophies?

A case study: philosophy of science vs science studies

The obvious starting point: the Correspondence Theory of Truth

Progress in science: some philosophical accounts

Progress in science: different philosophical accounts

Progress in mathematics: some historical considerations

History of mathematics: the philosophical approach

Logic: the historical perspective

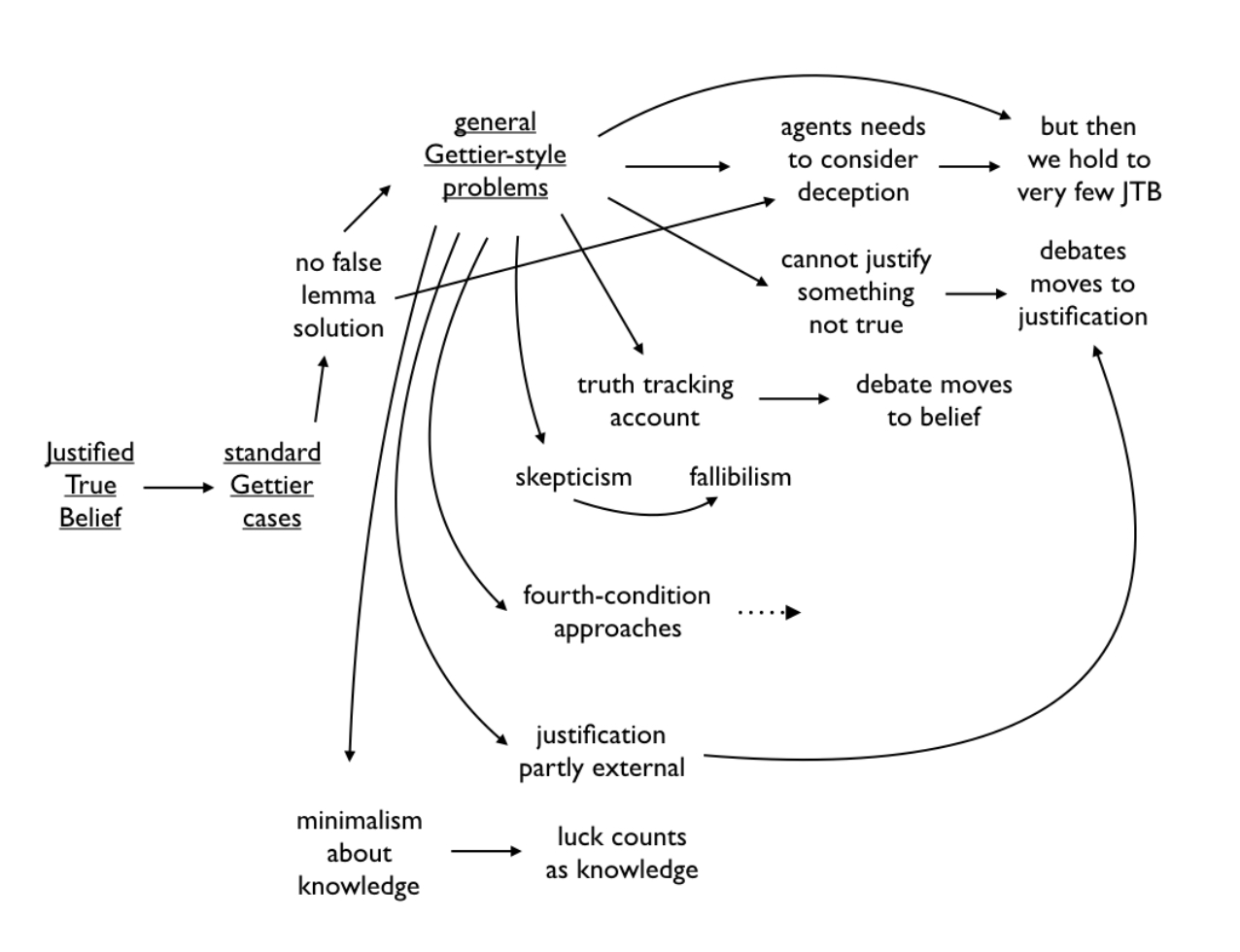

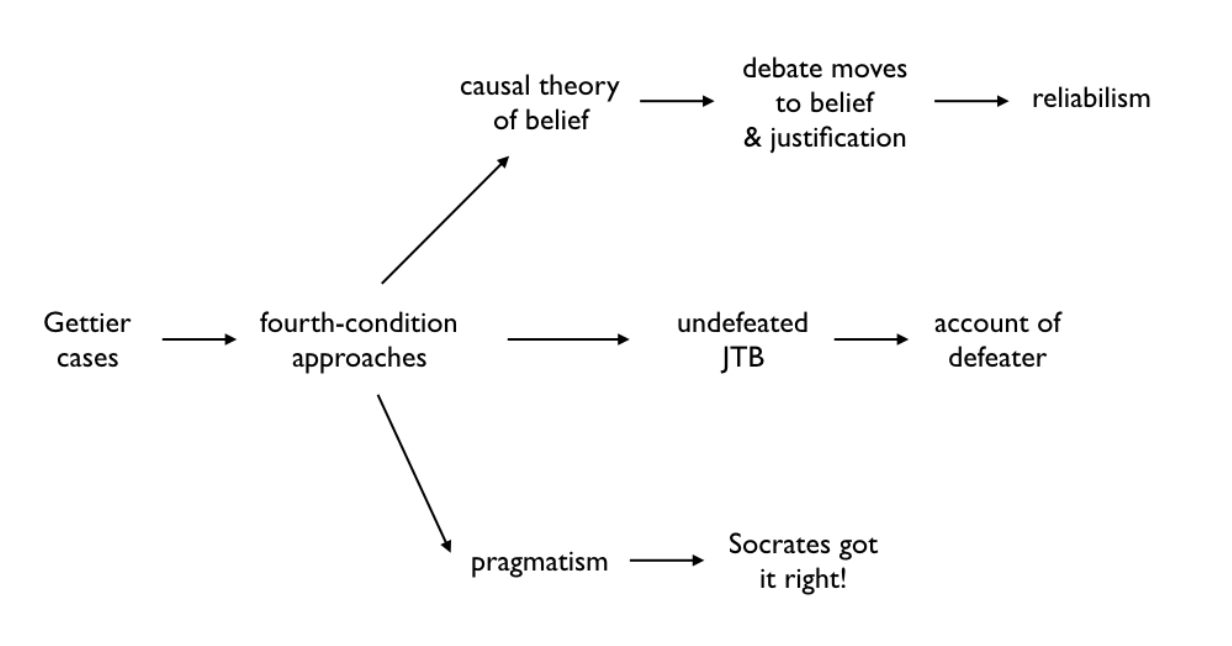

Knowledge from Plato to Gettier and beyond

More, much more, on epistemology

Philosophy of science: forms of realism and antirealism

Ethics: the utilitarian-consequentialist landscape

But is it useful? On the difference between chess and chmess

The Experimental Philosophy challenge

Case Study 1: Morality and concept application

Case Study 2: Moral objectivism vs moral relativism

Case Study 4: Phenomenal consciousness

Yet another challenge: the rise of the Digital Humanities

Publisher Details

Massimo Pigliucci, 2016

The Nature of Philosophy — How Philosophy Makes Progress and Why It Matters

By Massimo Pigliucci, K.D. Irani Professor of Philosophy, the City College of New York

Stoa Nova Publications

Cover: Causarum Cognitio (Philosophy) by Raphael, Wikimedia

If you like this free booklet, please consider supporting my writings at Patreon or Medium

Introduction — Read This First...

We are responsible for some things, while there are others for which we cannot be held responsible. (Epictetus)

Readers (including, often, myself) have a bad habit of skipping introductions, as if they were irrelevant afterthoughts to the book they are about to spend a considerable amount of time with. Instead, introductions — at the least when carefully thought out — are crucial reading keys to the text, setting the stage for the proper understanding (according to the author) of what comes next. This introduction is written in that spirit, so I hope you will begin your time with this book by reading it first.

As the quote above from Epictetus reminds us, the ancient Stoics made a big deal of differentiating what is in our power from what is not in our power, believing that our focus in life ought to be on the former, not the latter. Writing this book the way I wrote it, or in a number of other possible ways, is in my power. How people will react to it is not in my power. Nonetheless, it will be useful to set the stage and acknowledge some potential issues right at the outset, so that any disagreement will be due to actual divergence of opinion, not to misunderstandings.

The central concept of the book is the idea of “progress” and how it plays in different disciplines, specifically science, mathematics, logic and philosophy — which I see as somewhat allied fields, though each with its own crucial distinctive features. Indeed, a major part of this project is to argue that science, the usual paragon for progress among academic disciplines, is actually unusual, and certainly distinct from the other three. And I will argue that philosophy is in an interesting sense situated somewhere between science on the one hand and math and logic on the other hand, at the least when it comes to how these fields make progress.

But I am getting slightly ahead of myself. One would think that progress is easy to define, yet a cursory look at the literature would quickly disabuse you of that hope (as we will appreciate in due course, there is plenty of disagreement over what the word means even when narrowly applied to the seemingly uncontroversial case of science). As is often advisable in such cases, a reasonable approach is to go Wittgensteinian and argue that “progress” is a family resemblance concept. Wittgenstein’s own famous example of this type of concept was the idea of “game,” which does not admit of a small set of necessary and jointly sufficient conditions in order to be defined, and yet this doesn’t seem to preclude us from distinguishing games from not-games, at least most of the time. In his Philosophical Investigations (1953 / 2009), Wittgenstein begins by saying “consider for example the proceedings that we call ‘games’ ... look and see whether there is anything common to all.” (§66) After mentioning a number of such examples, he says: “And we can go through the many, many other groups of games in the same way; we can see how similarities crop up and disappear. And the result of this examination is: we see a complicated network of similarities overlapping and criss-crossing: sometimes overall similarities.” Hence: “I can think of no better expression to characterize these similarities than ‘family resemblances’; for the various resemblances between members of a family: build, features, colour of eyes, gait, temperament, etc. etc. overlap and criss-cross in the same way. And I shall say: ‘games’ form a family.” (§67) Concluding: “And this is how we do use the word ‘game.’ For how is the concept of a game bounded? What still counts as a game and what no longer does? Can you give the boundary? No. You can draw one; for none has so far been drawn. (But that never troubled you before when you used the word ‘game.’)” (§68)

Progress, then, can be thought of as being like pornography (to paraphrase the famous quip by US Supreme Court Justice Potter Stewart): “I know it when I see it.” But perhaps we can descend from the high echelons of contemporary philosophy and jurisprudence and simply do the obvious thing: look it up in a dictionary. For instance, from the Merriam-Webster we get:

i. “forward or onward movement toward a destination”

ii. or: “advancement toward a better, more complete, or more modern condition”

with the additional useful information that the term originates from the Latin (via Middle English) progressus, which means “an advance” from the verb progredi: pro for forward and gradi for walking.

How is that going to help? I will defend the proposition that progress in science is a teleonomic (i.e., goal oriented) process along definition (i), where the goal is to increase our knowledge and understanding of the natural world. Even though we shall see that there are a lot more complications and nuances that need to be discussed in order to agree with that general conclusion, I believe this captures what most scientists and philosophers of science mean when they say that science, unquestionably, makes progress.

Definition (ii), however, is more akin to what I think has been going on in mathematics, logic and (with an important qualification to be made in a bit) philosophy. Consider first mathematics (and, by similar arguments, logic): since I do not believe in a Platonic realm where mathematical and logical objects “exist” in any meaningful, mind-independent sense of the word (more on this later), I do not think mathematics and logic can be understood as teleonomic disciplines (fair warning to the reader, however: many mathematicians and a number of philosophers of mathematics do consider themselves Platonists). This means that I don’t think that mathematics pursues an ultimate target of truth to be discovered, analogous to the mapping onto the kind of external reality that science is after. Rather, I think of mathematics (and logic) as advancing “toward a better, more complete” position: “better” in the sense that the process opens up new lines of internal inquiry (mathematical and logical problems give origin to new, internally generated problems), and “more complete” in the sense that mathematicians (and logicians) are best thought of as engaged in the exploration of what throughout the book I call a space of conceptual (as distinct from empirical) possibilities.

How do we cash out this idea of a space of conceptual possibilities? And is such a space discovered or invented? During the first draft of this book I was only in a position to provide a sketched, intuitive answer to these questions. But then I came across Roberto Mangabeira Unger and Lee Smolin’s The Singular Universe and the Reality of Time: A Proposal in Natural Philosophy (2014), where they provide what for me is a highly satisfactory answer in the context of their own discussion of the nature of mathematics. Let me summarize their arguments, because they are crucial to my project as laid out in this book.

In the second part of their tome (which was written by Smolin; Unger wrote the first part), Chapter 5 begins by acknowledging that some version of mathematical Platonism, the idea that “mathematics is the study of a timeless but real realm of mathematical objects,” is common among mathematicians (and, as I said, philosophers of mathematics), though by no means universal, and certainly not uncontroversial. The standard dichotomy here is between mathematical objects (a term I am using loosely to indicate any sort of mathematical construct, from numbers to theorems, etc.) being discovered (Platonism) vs being invented (nominalism and similar positions: Bueno 2013).

Smolin immediately proceeds to reject the above choice as an example of false dichotomy: it is simply not the case that either mathematical objects exist independently of human minds and are therefore discovered, or that they do not exist prior to our making them up and are therefore invented. Smolin presents instead a table with four possibilities:

| Category | Prior existence | Properties |
| --- | --- | --- |
| “Discovered” | yes | rigid |
| “Fictional” | yes | non-rigid |
| “Evoked” | no | rigid |
| “Invented” | no | non-rigid |

By “rigid properties” here Smolin means that the objects in question present us with “highly constrained” choices about their properties, once we become aware of such objects. Let’s begin with the obvious entry in the table: when objects exist prior to humans thinking about them, and they have rigid properties. All scientific discoveries fall into this category: planets, say, exist “out there” independently of anyone being able to verify this fact, so when we become capable of verifying their existence and of studying their properties we discover them.

Objects that had no prior existence, and are also characterized by no rigid properties include, for instance, fictional characters (Smolin calls them “invented”). Sherlock Holmes did not exist until the time Arthur Conan Doyle invented (surely the appropriate term!) him, and his characteristics are not rigid, as has been (sometimes painfully) obvious once Holmes got into the public domain and different authors could pretty much do what they wanted with him (and I say this as a fan of both Robert Downey Jr. and Benedict Cumberbatch). Smolin, unfortunately, doesn’t talk about the “fictional” category of his classification, which comprises objects that had prior existence and yet are not characterized by rigid properties. Perhaps some scientific concepts, such as that of biological species, fall into this class: “species,” however one conceives of them, certainly exist in the outside world; but how one conceives of them (i.e., what properties they have) may depend on a given biologist’s interests (this is referred to as pluralism about species concepts in the philosophy of biology: Mishler & Donoghue 1982).

The crucial entry in the table, for our purposes here, is that of “evoked” objects: “Why could something come to exist, which did not exist before, and, nonetheless, once it comes to exist, there is no choice about how its properties come out? Let us call this possibility evoked. Maybe mathematics is evoked” (Unger and Smolin, 2014, 422). Smolin goes on to provide an uncontroversial class of evocation, and just like Wittgenstein, he chooses games: “For example, there are an infinite number of games we might invent. We invent the rules but, once invented, there is a set of possible plays of the game which the rules allow. We can explore the space of possible games by playing them, and we can also in some cases deduce general theorems about the outcomes of games. It feels like we are exploring a pre-existing territory as we often have little or no choice, because there are often surprises and incredibly beautiful insights into the structure of the game we created. But there is no reason to think that game existed before we invented the rules. What could that even mean?” (p. 422)

Interestingly, Smolin includes forms of poetry and music in the evoked category: once someone invented haiku, or the blues, then others were constrained by certain rules if they wanted to produce something that could reasonably be called haiku poetry, or blues music. An obvious example that is very close to mathematics (and logic) itself is provided by board games: “When a game like chess is invented a whole bundle of facts become demonstrable, some of which indeed are theorems that become provable through straightforward mathematical reasoning. As we do not believe in timeless Platonic realities, we do not want to say that chess always existed — in our view of the world, chess came into existence at the moment the rules were codified. This means we have to say that all the facts about it became not only demonstrable, but true, at that moment as well ... Once evoked, the facts about chess are objective, in that if any one person can demonstrate one, anyone can. And they are independent of time or particular context: they will be the same facts no matter who considers them or when they are considered” (p. 423).

This struck me as very powerful and widely applicable. Smolin isn’t simply taking sides in the old Platonist / nominalist debate about the nature of mathematics. He is significantly advancing that debate by showing that there are two other cases missing from the pertinent taxonomy, and that moreover one of those cases provides a positive account of mathematical (and similar) objects, rather than just a rejection of Platonism. But in what sense is mathematics analogous to chess? Here is Smolin again: “There is a potential infinity of formal axiomatic systems (FASs). Once one is evoked it can be explored and there are many discoveries to be made about it. But that statement does not imply that it, or all the infinite number of possible formal axiomatic systems, existed before they were evoked. Indeed, it’s hard to think what belief in the prior existence of a FAS would add. Once evoked, a FAS has many properties which can be proved about which there is no choice — that itself is a property that can be established. This implies there are many discoveries to be made about it. In fact, many FASs once evoked imply a countably infinite number of true properties, which can be proved” (p. 425).

Reflecting on the category of evoked objective truths provided me with a reading key to make sense of what I was attempting to articulate: my suggestion here, then, is that Smolin’s account of mathematics applies, mutatis mutandis (as philosophers are wont to say), to logic and, with an important caveat, to philosophy. All these disciplines (but, crucially, not science) are in the business of ascertaining “evoked,” objective truths about their subject matters, even though these truths are neither discovered (in the sense of corresponding to mind-independent states of affairs in the outside world) nor invented (in the sense of being entirely arbitrary constructs of the human mind).

I have said twice already that philosophy is closer to mathematics and logic (and a bit further from science) only with a qualification. That qualification is that philosophy is, in fact, concerned directly with the state of the world (unlike mathematics and logic, which, while very useful to scientists, could be, and largely are, pursued without any reference whatsoever to how the world actually is). If you are doing ethics, or political philosophy, for instance, you are very much concerned with those aspects of the world that deal with interactions among humans within the context of their societies. If you are doing philosophy of mind you are ultimately concerned with how actual human (and perhaps artificial) brains work and generate consciousness and intelligence. Even if you are a metaphysician, engaging in what is arguably the most abstract field of philosophical inquiry, you are still trying to provide an account of how things hang together, so to speak, in the real cosmos. This means that the basic parameters that philosophers use as their inputs, the starting points of their philosophizing, their equivalent of axioms in mathematics and assumptions in logic (or rules in chess), are empirical data about the world. These data come both from everyday experience (since the time of the pre-Socratics) and, of course, increasingly from the world of science itself. Philosophy, I maintain, is in the business of exploring the sort of conceptually evoked spaces that Smolin is talking about, but the evocation is the result of whatever starting assumptions are made by individual philosophers working within a particular field and, crucially, of the constraints that are imposed by our best understanding of how the world actually is.

I hope it is clear from the above analysis that I am not suggesting that every field that can be construed as somehow exploring a conceptual space ipso facto makes progress. If that were the case, we would be forced to say that pretty much everything humans do makes progress. Consider, for instance, fiction writing. Specifically, imagine a science fiction author who writes three books about the same planet existing in three different “time lines.” [1] In each book, the geography of the planet is different, which leads to different evolutionary paths for its inhabitants. However, each description is constrained by the laws of physics (he wants to keep things in accordance with those laws), by some rational principles (the same object can’t be in two places at once, as that would violate the principle of non-contradiction), and perhaps even by certain aesthetic principles. Each book tells a different story, constrained both empirically (laws of physics) and logically. In a sense, this writer would be exploring different conceptual spaces, by describing different possibilities unfolding on the fictitious planet. However, I do not think that we want to say that he is making progress. He is just exploring various imaginary worlds. The difference with philosophy, then, is twofold: i) our writer is doing what Smolin calls “inventing”: his worlds did not have prior existence to his imagining them, and they have no rigid properties. Even the constraints he imposes from the outset, both empirical and logical, could have been otherwise. He could have easily imagined planets where both the laws of physics and those of logic are different. Philosophy, I maintain, is in the business of doing empirically informed evoking, not inventing, which means that its objects of study have rigid properties. ii) Philosophy, again, is very much concerned with the world as it is, not with arbitrarily invented ones. Even when philosophers venture into thought experiments, or explore “possible worlds,” they do so with an interest in figuring things out as far as this world is concerned. So, no, I am not suggesting that every human activity makes progress, nor that philosophy is like literature.

There are two additional issues I want to take up right at the beginning of this book, though they will reappear regularly throughout the volume. They both, I think, contribute to much confusion and perplexity whenever the topic of progress in philosophy comes up for discussion. The first issue is that philosophers too often use the word “theory” to refer to what they are doing, while in fact our discipline is not in the business of producing theories — if by that one means complex and testable explanations of how the world works. The word “theory” immediately leads one to think of science (though, of course, there are mathematical theories too). In light of what I have just argued about the teleonomic nature of scientific progress contrasted with the exploratory / qualificatory nature of philosophical inquiry, one can see how talking about philosophical “theories” may not be productive. Philosophers do have an alternative term, which gets used quite often interchangeably with “theory”: account. I much prefer the latter, and will make an effort to drop the former altogether. “Account” seems a more appropriate term because philosophy — the way I see it — is in the business of clarifying things, or analyzing in order to bring about understanding, not really discovering new facts, but rather evoking rational conclusions arising from certain ways of looking at a given problem or set of facts.

The second issue is a way to concede an important point to critics of philosophy (which include a number of scientists and, surprisingly, philosophers themselves). I am proposing a model of philosophical inquiry conceived as being in the business of providing accounts of evoked truths by exploring and refining our understanding of a series of conceptual landscapes. But it is true that such refinement can at some point begin to yield increasingly diminishing returns, so that certain discussion threads become more and more limited in scope, ever more the result of clever logical hair splitting, and of less and less use or interest to anyone but a vanishingly small group of professionals who, for whatever reason, have become passionate about it. A good example of this, I think, is the field of “gettierology,” which has resulted from discussions on the implications of a landmark (very short) paper published by Edmund Gettier back in 1963, a paper that for the first time questioned the famous concept of knowledge as justified true belief often attributed (with some scholarly disagreement) to Plato. We will examine Gettier’s paper and its aftermath as an example of progress in philosophy later on, but it has to be admitted that more than half a century later pretty much all of the interesting things that could have possibly been said in response to Gettier are likely to have been said, and that ongoing controversies on the topic lack relevance and look increasingly self-involved.

However, I will also immediately point out that this problem isn’t specific to philosophy: pretty much every academic field (from literary criticism to history, from the social sciences to, yes, even the natural sciences) suffers from the same malaise, and examples are not hard to find. I spent a large part of my academic career as an evolutionary biologist, and I cannot vividly enough convey the sheer boredom of sitting through yet another research seminar in which someone presented lots of data that simply confirmed once again what everyone already knew, except that the work had been carried out on a species of organisms for which it hadn’t been done before. Since there are (conservatively) close to nine million species on our planet, you can see the potential for endless funding and boundless irrelevancy. At the least, philosophical scholarship is very cheap by comparison with even the least expensive research program in the natural sciences!

Before concluding this overview and inviting you to plunge into the main part of the book, let me briefly discuss some of the surprisingly few papers written by philosophers over the years that explicitly take up the question of progress in their field, as part of scholarship in so-called “metaphilosophy.” I have chosen three of these papers as representative of the (scant) available literature: Moody (1986), Dietrich (2011) and Chalmers (2015). [2] The first one claims that there is indeed progress in philosophy, though with important qualifications, the second one denies it (also with crucial caveats), and the third one takes an intermediate position.

Moody (1986) distinguishes among three conceptions of progress: what he calls Progress-1 takes place when there is a specifiable goal about which people can agree that it has been achieved, or what counts towards achieving it. If you are on a diet, for instance, and decide to lose ten pounds, you have a measurable specific goal, and you can be said to make progress insofar as your weight goes down and approaches the specific target (and, of course, you can also measure your regress, should your weight go further up!). Progress-2 occurs when one cannot so clearly specify a goal to be reached, and yet an individual or an external observer can competently judge that progress (or regress) has occurred when comparing the situation at time t vs the situation at time t+1, even though the criteria by which to make that judgment are subjective. Moody suggests, for example, that a composer guided by an inner sense of when they are “getting it right” would be making this sort of progress while composing. Finally, Progress-3 is a hybrid animal, instantiated by situations where there are intermediate but not overarching goals. Interestingly, Moody says that mathematics makes Progress-3, insofar as there is no overall goal of mathematical scholarship, and yet mathematicians do set intermediate goals for themselves, and the achievement of these goals (like the proof of Fermat’s Last Theorem) is recognized as such by the mathematical community. (Moody says that science too makes Progress-3, although as we have discussed before, science actually does have an ultimate, specifiable goal: understanding and explaining the natural world. So I would rather be inclined to say that science makes Progress-1, within Moody’s scheme.)

Moody’s next step is to provisionally assume that philosophy is a type of inquiry, and then ask whether any of his three categories of progress apply to it. The first obstacle is that philosophy does not appear to have consensus-generating procedures such as those found in the natural sciences or in technological fields like engineering. So far so good for my own account given above, since I distinguish progress in the sciences from progress in other fields, particularly philosophy. Moody claims (1986, 37) that “the only thing that philosophers are likely to agree about with enthusiasm is the abysmal inadequacy of a particular theory.” While I think that is actually a bit too pessimistic (we will see that philosophers agree — as a plurality of opinions — on much more than they are normally given credit for), I do not share Moody’s pessimistic assessment of that observation: negative progress, i.e., the elimination of bad ideas, is progress nonetheless. Interestingly, Moody remarks (again, with pessimism that is not warranted in my mind) that in philosophy people talk about “issues” and of “positions,” not of the scientific equivalent “hypotheses” and “results.” I think that is because philosophy is not sufficiently akin to science for the latter terms to make sense within discussions of philosophical inquiry.

Moody soon concludes that philosophy does not make Progress-1 or Progress-3, because its history has not yielded a trail of solved problems. What about Progress-2? Here the discussion is interesting though somewhat marginal to my own project. Moody takes up the possibility that perhaps philosophy is not a type of inquiry after all, and analyzes in some detail two alternative conceptions: Wittgenstein’s (1965) idea of philosophy as “therapy” and Richard Rorty’s (1980) so-called “conversational model” of philosophy. As Moody (1986, 38) magisterially summarizes it: “Wittgenstein believed that philosophical problems are somehow spurious and that the activity of philosophy ... should terminate with the withdrawal, or deconstruction, of philosophical questions.” On this view, then, there is progress, of sorts, in philosophy, but it is the sort of “terminus” brought about by committing seppuku. As Moody rather drily comments, while nobody can seriously claim that Wittgenstein’s ideas have not been taken seriously, it is equally undeniable that philosophy has largely gone forward pretty much as if the therapeutic approach had never been articulated. If a proposed account of the nature of philosophy has so blatantly been ignored by the relevant epistemic community, we can safely file it away for the purposes of this book.

Rorty’s starting point is what he took to be the (disputable, in my opinion) observation that philosophy has failed at its self-appointed task of analysis and criticism. Moody quotes him as saying (1986, 39): “The attempts of both analytic philosophers and phenomenologists to ‘ground’ this and ‘criticize’ that were shrugged off by those whose activities were purportedly being grounded and criticized.” Rorty arrived at this view because of his rejection of what he saw as philosophy’s “hangover” from the 17th and 18th centuries, when philosophers were attempting to set their inquiry within a framework that allowed a priori truths to be discovered (think Descartes and Kant), even though David Hume had dealt that framework a fatal blow already in the 18th century.

While Moody finds much of Rorty’s analysis on target, I must confess that I don’t, even though it does have some value. For instance, the fact that other disciplines (like science) marched on while refusing to be grounded or criticized by philosophy is neither entirely true (lots of scientists have paid and still pay a significant amount of attention to philosophy of science, for instance) nor should it necessarily be taken as the ultimate test of the value of philosophy even if true: creationists and climate change denialists, after all, shrug off any criticism of their positions, but that doesn’t make such criticism invalid, or futile for that matter (since others are responding to it). Yet, there is something to be said for thinking of philosophy as a “conversation” more than an inquiry, as Rorty did. The problem is that this and other dichotomies presented to us by Rorty are, as Moody himself comments, false: “we do not have to choose between ‘saying something,’ itself a rather empty notion that manages to say virtually nothing, and inquiring, or between ‘conversing’ and ‘interacting with nonhuman reality.’” Indeed we don’t.

But what account, then, can we turn to in order to make sense of progress in philosophy, according to Moody? I recommend the interested reader check Moody’s discussion of Robert Nozick’s (1981) “explanational model” of philosophy, as well as of John Kekes’ (1980) “perennial problems” approach, but my own treatment here will jump to Nicholas Rescher (1978) and the concept of “aporetic clusters,” which is one path that supports the conclusion — according to Moody — that philosophy does make progress, and it is a type-2 progress. Rescher thinks that it is unrealistic to expect consensus in philosophy, and yet does not see this as a problem, but rather as a natural outcome of the nature of philosophical inquiry (1986, 44): “in philosophy, supportive argumentation is never alternative-precluding. Thus the fact that a good case can be made out for giving one particular answer to a philosophical question is never considered as constituting a valid reason for denying that an equally good case can be produced for some other incompatible answers to this question.”

In fact, Rescher thinks that philosophers come up with “families” of alternative solutions to any given philosophical problem, which he labels aporetic clusters. [3] According to this view, some philosophical accounts are eliminated, while others are retained and refined. The keepers become philosophical classics, like “virtue ethics,” “utilitarianism” or “Kantian deontology” in ethics, or “constructive empiricism” and “structural realism” in philosophy of science. Rescher’s view is not at all incompatible with my idea of philosophy as evoking (to use the terminology introduced earlier), and then exploring and refining, peaks in conceptual landscapes. As Moody (1986, 45) aptly summarizes it: “that there are ‘aporetic clusters’ is evidence of a kind of progress. That the necrology of failed arguments is so long is further evidence.”

A very different take on all of this is what we get from the second paper I have selected to get our feet wet for our exploration of progress in philosophy and allied disciplines, the provocatively titled “There is no progress in philosophy,” by Eric Dietrich. The author does not mince words (to be sure, a professional hazard in philosophy, to which I am not immune myself), even going so far as diagnosing people who disagree with his contention that philosophy does not make progress with a mental disability, which he labels “anosognosia”: “[a] condition where the affected person denies there is any problem.” I guess the reader should be warned that, apparently, I do suffer from anosognosia.

Dietrich begins by arguing that philosophy is in a relevant sense like science. Specifically, he draws a parallel between strong disagreements among philosophers on, say, utilitarianism vs deontology in ethics, and similarly strong, and long-lasting, disagreements among scientists about issues like group selection in evolutionary biology, or quantum mechanics during the early part of the 20th century. But, Dietrich then adds, philosophy is also relevantly dissimilar from science: scientific disagreements eventually get resolved and the enterprise lurches forward (every physicist nowadays accepts quantum mechanics, having abandoned Einstein’s famous skepticism about it, though this hasn’t happened yet for group selection, it must be pointed out). Philosophical disagreements, instead, have been more or less the same for 3000 years. Conclusion: philosophy does not make progress, it just “stays current,” meaning that it updates its discussions with the times (e.g., today we debate ethical questions surrounding gay rights, not those concerning slavery, as the latter is irrelevant, at the least in many parts of the world).

Dietrich acknowledges that modern philosophy contains many new notions, and lists a number of them (e.g., supervenience, possible worlds, and modal logic). But he immediately dismisses the suggestion that these may represent advances in philosophical discourse as “lame.” His evidence is that there is no widespread agreement about any of these notions, so their introduction cannot possibly be seen as an advance. It follows that those philosophers who insist on defending their field in this fashion are affected by the above-mentioned mental condition.

The reader will have already seen that Dietrich’s point is actually well countered by the preceding discussion, and particularly the explanation put forth by Rescher for why we see aporetic clusters of positions in philosophy. I will develop my own rejection of Dietrich’s sweeping conclusion in terms of non-teleonomic progress instantiated as exploration and refinement of a series of conceptual spaces throughout much of this book. And I will present (empirical!) evidence that philosophers are more in agreement on a wide range of issues than Dietrich and others acknowledge, though the agreement is about the viability of different positions within a given aporetic cluster, not about a single “winning” theory — which makes sense once we conceptualize philosophy in the manner introduced above and to be developed in the following chapters.

But even simply considering Dietrich’s own examples, it is hard to see where exactly he gets the idea that there is overwhelming disagreement: I don’t know of logicians who differ on the validity of modal logic, though of course they will deploy it differently in pursuit of their own specific goals. Nor do I know of anyone who disagrees on the concepts of supervenience or possible worlds, though people do reasonably disagree on what such concepts entail vis-a-vis a number of specific philosophical questions. Dietrich makes his argument in part by way of a thought experiment in which he brings Aristotle back to life and has him attend a couple of college courses: he imagines the Greek finding himself astonished and bewildered in a class on basic physics, but very much at ease in a class in logic or metaphysics (all three subjects, of course, on which Aristotle had a great deal to write, 23 centuries ago). My own intuition, however, is a bit different (we will come back to the use and misuse of intuitions and thought experiments in philosophy). While I agree that Aristotle wouldn’t know what to make of quantum mechanics and general relativity, he would have a lot of catching up to do in order to understand modern logics (plural, as there is an ample variety of them), and even in metaphysics he would have to take at least a remedial course before jumping in with both feet (not to mention that he wouldn’t know what the name of the discipline refers to, since it was adopted after his death).

Dietrich then moves on to introduce another mental illness, apparently affecting a much smaller number of philosophers: nosognosia, a condition under which the patient knows that there is something wrong, but still has some trouble fully accepting the implications. He discusses two such philosophers: Thomas Nagel (1986) and Colin McGinn (1993). Both Nagel and McGinn conclude that philosophical problems are intractable, and, hence, that there is no such thing as philosophical progress. However, they arrive at this conclusion by different routes. For Nagel this is because of an irreconcilable conflict between first (subjective) and third (objective) person accounts. While science deals with the latter, philosophy has to tackle both, and this creates contradictions that cannot be overcome. Here is Dietrich’s summary of Nagel’s view (2011, 339):

“There are three points of view. From the subjective view, we get one set of answers to philosophy questions, and from the objective view, we get another, usually contradictory, set, and from a third view, from which one can see the answers of both the subjective and objective views, one can see that the subjective and objective answers are equally valid and equally true. Therefore, philosophy problems are intractable. Philosophy cannot progress because it cannot solve them.”

McGinn, instead, says that there are answers to philosophical problems, but these — for some mysterious reason — are beyond human reach. Again, Dietrich’s summary (2011, 339):

“There are two relevant points of view. From one, the human view, philosophy problems are intractable. From the other, the alien view, philosophy problems are tractable (perhaps even trivial). The situation here is exactly like the situation with dogs and [the] English [language]. We easily understand it. Dogs understand only a tiny number of words, and seem to know nothing of combinatorial syntax. Therefore, though it is unlikely we can solve any philosophy problems, they are not inherently intractable.”

Briefly, I think both Nagel and McGinn are seriously mistaken — and I believe most philosophers agree, as testified by the straightforward observation that few seem to have stopped philosophizing as a result of considering these (well known) arguments.

Nagel has made a similar claim about the incompatibility of first and third person descriptions before, specifically in philosophy of mind (indeed, we will shortly discuss his classic paper on what it is like to be a bat). But that alleged incompatibility is more simply seen as two different types of descriptions of certain phenomena, descriptions that do not have to be incompatible, and yet that are not reducible to each other. Briefly, the fact that I feel pain (first person, subjective description) and that a neuroscientist will say that my C-fibers have fired (third person, objective description) are both true statements; they are compatible (indeed, I feel pain because my C-fibers are firing, as demonstrated by the fact that if I chemically inhibit that neurological mechanism I thereby cease to feel pain); and they are best understood, respectively, as an experience vs an explanation. But experiences don’t (have to) contradict explanations, assuming that the latter are at the least approximately true. A fortiori, I would like to see a good example of a philosophical problem that necessarily leads to incompatible treatments when tackled from either perspective. I do not think such a thing exists.

McGinn’s position is, quite simply, empty. While the analogy between the advanced understanding of an alien race vs our own primitive capacities and the similar difference between how dogs and humans understand English may seem compelling, there is no independent reason to think that philosophical problems are intractable by the human mind. Indeed, they have been tackled over the course of centuries, and we will see that progress has been made (once we understand “progress” in the way sketched above and to be further unpacked throughout the book). Interestingly, McGinn too, like Nagel before him, applies his approach to philosophy of mind, where he claims that the problem of consciousness cannot be resolved because we are just not smart enough. This “mysterian” position, as it is known, may be correct for all I know, but it doesn’t seem to lead us anywhere.

Similarly, where does Dietrich’s contemptuous rejection of the very idea of philosophical progress lead him? Nowhere, as far as I can see. He concludes by quoting Wittgenstein from the Tractatus: “My propositions are elucidatory in this way: he who understands me finally recognizes them as senseless, when he has climbed out through them, on them, over them. (He must so to speak throw away the ladder, after he has climbed up on it.) What we cannot speak about we must pass over in silence.” And yet, again, philosophy has persisted in existing as a field (I would be so bold as to say, in moving forward!) despite Wittgenstein, and I greatly suspect will do much the same despite Dietrich’s cynicism.

A few comments now on Chalmers’ (2015) contribution to the question of progress, or lack thereof, in philosophy. He stakes a reasonable intermediate position, acknowledging that philosophy clearly has made progress, but asking why it hasn’t achieved more. He arrives at the first conclusion by a number of ways, including noting the incontrovertible fact that, for instance, the works of highly notable philosophers like Plato, Aristotle, Hume, Kant, Frege, Russell, Kripke, and Lewis have clearly been progressive with respect to the thinkers that preceded them, no matter what one’s conception of “progress” happens to be. Chalmers goes on to briefly discuss a number of ways in which philosophy has, in fact, made progress: there has been convergence on some of the big questions (e.g., most professional philosophers are atheists and physicalists about mind), as well as some of the smaller ones (e.g., knowledge is not simply justified true belief, conditional probabilities are not probabilities of conditionals).

Still, maintains Chalmers, the progress that philosophy has made is slow and small in comparison to that of the natural (but not, he argues, the social) sciences. He discusses some possible explanations for this difference between philosophy and science, including: “disciplinary speciation,” the fact that new disciplines spin off philosophy precisely when they do begin to make sustained progress, like physics, psychology, economics, linguistics, and so on; “anti-realism,” the idea that certain areas of philosophy do not converge on truth because there is no such truth to be found (e.g., moral philosophy); “verbal disputes,” the Wittgensteinian point that at the least some debates in philosophy are more about using language at cross-purposes than about substantive differences (e.g., free will); “greater distance from the data,” meaning that for some reason philosophy operates nearer the periphery of Quine’s famous web of beliefs (more on this in the next chapters); “sociological explanations,” where some positions become dominant, or recede into the background, because of the influence of individual philosophers within a given generation (e.g., the unpopularity of the analytic-synthetic distinction during Quine’s active academic career); “psychological explanations,” in the sense that individual philosophers may be more or less prone to endorse certain positions as a result of their character and other psychological traits; and “evolutionary explanations,” the contention that perhaps our naturally evolved minds are smart enough to pose philosophical questions but not to answer them.

Chalmers’ conclusion is that there may be some degree of truth to all seven explanations, but that they do not provide the full picture, in part because some of them apply to other fields as well: it’s not like natural scientists don’t have their own sociological and psychological quirks to deal with, and we may not be smart enough to settle philosophical questions, but we do seem smart enough to develop quantum mechanics and to solve Fermat’s Last Theorem. I think he is mostly on target, but I also think that the missing part of the explanation in his analysis derives from a crucial assumption that he made and that I will reject throughout this book: philosophy is simply not in the same sort of business as the natural sciences, so any talk of direct comparison in terms of progress and truths at the least partially misses the point. Right at the beginning of his paper Chalmers states: “The measure of progress I will use is collective convergence to the truth. The benchmark I will use is comparison to the hard sciences.” This is precisely what I will not do here, though it will take a bit to articulate and defend why.

A final parting note, in the spirit of Introductions as reading keys to one’s book. Friends and reviewers have of course commented on what you are about to read. Some of them found me too critical of, say, the continental approach to philosophy. Others, predictably, found me not critical enough. Some people thought parts of the book are too difficult for a generally educated reader (true), while other people thought some parts would be too obvious to a professional philosopher (also true). This was by design: I am writing with multiple audiences in mind, and I never believed one has to get one hundred percent of the references or arguments in a book in order to enjoy or learn from it (try to read the above quoted Wittgenstein that way and see how far you get, even as a professional philosopher — and there are much more blatant examples available). And of course the complaint has reasonably been raised that I don’t go into the proper degree of depth on a number of important technical issues in philosophy of science, of mathematics, of logic, and of philosophy itself (i.e., in meta-philosophy). Again, true. But what you are about to read is not meant as, nor could it possibly be, either an encyclopedia on philosophical thought or a set of simultaneous original contributions to many of the sub-specialties and specific issues I touch on. Rather, the goal is to pull together, the best I can, what a number of excellent thinkers have said on a variety of issues, connecting them into an overarching narrative that can provide a preliminary, organic stab at the question at the core of the book: does philosophy make progress, and if so, in which sense? I hope that that is justification enough for what you are about to read. And I am confident that better thinkers than I will soon make further progress down this road.

There are, of course, a number of people to whom I am grateful, either for reading drafts of this book (in toto or in part), or for having influenced what I am trying to do here as a result of our discussions. Among these are some of my colleagues at the City University of New York’s Graduate Center, particularly Graham Priest (for discussions about the nature of logic), Jesse Prinz (for discussions about the nature of everything, but particularly science), and Peter Godfrey-Smith (on the nature of science and specifically biology). Leonard Finkelman is one of those who have read the book in its entirety, an effort for which I will be forever grateful. Thanks also to Dan Tippens for specific comments on two chapters (on progress in mathematics & logic, and in philosophy). Elizabeth Branch Dyson, at Chicago Press, has been immensely patient with my revisions of the original manuscript, not to mention as encouraging as an editor could possibly be (and has kindly agreed to finally publish the whole shebang in the form you are reading). I would also like to thank Patricia Churchland and Elliott Sober for the initial support when this project was at the stage of a proposal, as well as two anonymous reviewers for their severe, but obviously well intentioned, criticisms of previous drafts.

—Massimo Pigliucci

New York, Summer 2016

1. Philosophy’s PR Problem

“Philosophy is dead.”

(Stephen Hawking)

However one characterizes the discipline of philosophy, there is little doubt that it has been suffering for a while from a severe public relations problem, and it is incumbent on all interested parties (beginning with professionals in the field) not just to ask themselves why, but also what can be done to improve the situation. This chapter is offered as a series of reflections on these two aspects of the issue. We will examine in some detail a series of representative examples of brash attacks on philosophy (to which I will offer my own, shall we say, blunt response), mostly carried out by a small but influential number of scientists and science writers, attacks that seem to capture something fundamental about the broader public’s attitude toward the discipline. As a scientist myself, I think I am unusually positioned to understand some of my colleagues’ take on philosophy. We will also see, however, that there are a number of prominent philosophers who have had the unfortunate effect of contributing to the problem by writing questionable things about science, thus somewhat justifying the backlash from the other side. Finally, we will look at how (despite the obliviousness of, and sometimes even objections and resistance by, most professional philosophers) the field has been making significant inroads in public discourse, ironically by essentially following the example set forth by science popularizing.

(Some) Scientists against philosophers

Science, as is well understood, is one of philosophy’s intellectual offspring, and was in fact known until the mid-19th century as “natural philosophy.” The very word “scientist” was invented in 1833 by philosopher William Whewell, apparently at the prompting of the poet Samuel Taylor Coleridge (Snyder 2006). There are very good historical and conceptual reasons for the weaning to have occurred (Bowler and Morus 2005; Lindberg 2008), a process that eventually led to the professionalization of academic science, which dramatically accelerated after World War II with the establishment (in the United States and elsewhere) of government sources of funding for scientific research. Given that the separation of the two fields has occurred slowly and, initially at least, amicably (both Descartes and Newton, for instance, considered themselves natural philosophers), one would expect each side to eventually go on with its business and leave the other to pursue its own. The reality is a bit more complicated than that.

As we shall see in a couple of chapters, some philosophers, especially analytical ones, do seem to suffer from a degree of “lab coat envy,” so to speak, which nudges them to concede perhaps a bit too much epistemic territory to science. At the opposite extreme, we have also had episodes of philosophers lashing out at science, often for egregiously bad reasons, thereby generating a (frequently scornful) reaction from the scientists themselves. It seems therefore appropriate to begin with an examination of what some vocal and prominent exponents of modern day natural philosophy have had to say recently about their mother discipline, and why they have so frequently and reliably missed the mark. Be forewarned: going through this section will increasingly feel like seeing some dirty intellectual laundry being aired in public. But I think it is a necessary preamble to my argument, and at any rate the pronouncements I will focus on and criticize below are already out there for anyone to see and make up their own mind about.

A good starting point, which I will discuss in detail since it is so paradigmatic, is a famous essay by Nobel-winning physicist Steven Weinberg, boldly entitled “Against Philosophy” (Weinberg 1994). The question Weinberg poses right at the end of the first paragraph of his essay, “Can philosophy give us any guidance toward a final theory?” is at once typical of physicists’ complaints about the discipline, and clearly shows why they are misguided. While there are a number of good examples of fruitful collaborations between scientists and philosophers, from evolutionary biology to quantum theory, the major point of philosophy of science (Weinberg’s main target) is most emphatically not to solve scientific (i.e., empirical) questions. We’ve got science for that, and it works very nicely.

Weinberg immediately tries to take some of the sting out of his overture by acknowledging the general value of philosophy, “much of which has nothing to do with science,” but immediately springs back to slinging mud within the same paragraph: “philosophy of science, at its best seems to me a pleasing gloss on the history and discoveries of science.” There are various aims of philosophy of science (Pigliucci 2008), only some of which have to do with helping physicists formulate new theories about the ultimate structure of the universe (or biologists to formulate new theories of evolution, and so on). Broadly speaking, philosophers of science are interested in three major areas of inquiry. The first deals with the generation of general theories of how science works, as in Popper’s (1963) ideas about falsificationism, or Kuhn’s (1963) concept of scientific revolutions and paradigm shifts. Second, philosophers of particular sciences (such as physics, biology, chemistry, etc.) are interested in the logic underlying the practice of the various subdivisions of the scientific enterprise, debating the use (and sometimes misuse) of concepts such as those of species (in biology: Wilkins 2009) or wave function (in physics: Krips 1990). Finally, philosophy of science may serve as an external mediator and sometimes critic of the social implications of scientific findings, as for instance in the case of the complex evidential and ethical issues raised by human genetic research (Kaplan 2000). While some of the above should be useful to working scientists, most of it is an exercise in studying science from the outside, not in practicing science itself, thus making Weinberg’s demand for direct help from philosophers in theoretical physics rather odd.

It is also not difficult to find outright misreadings of the philosophical literature in “Against Philosophy.” For instance, at one point Weinberg cites Wittgenstein in support of his thesis that philosophy is irrelevant to science: “Wittgenstein remarked that ‘nothing seems to me less likely than that a scientist or mathematician who reads me should be seriously influenced in the way he works.’” But this should be read in context (admittedly, not an easy task, when it comes to Wittgenstein), as the author was actually making an argument for the independence of philosophy from science and for the simultaneous deflating of the latter, and was definitely not talking about philosophy of science. Weinberg then comes perilously close to anti-intellectualism when he complains that, after reading some philosophy “from time to time,” he finds “some of it ... to be written in a jargon so impenetrable that I can only think that it aimed at impressing those who confound obscurity with profundity.” I’m confident that precisely the same unwarranted judgment could be made about any paper in fundamental physics, when read by someone who does not have the technical training necessary to read fundamental physics!

Weinberg complains about the fact that when he gives talks about the Big Bang someone in the audience regularly poses a “philosophical” question about the necessity of something existing before that moment, even though physics tells us that time itself started with the Big Bang, which means that the question of what was there before that pivotal event is meaningless. But a Nobel winner ought to understand the difference between a random member of the audience at a popular talk and the thinking of professional philosophers of physics. Indeed, Weinberg himself gives some credit to philosophers past when he points out that Augustine (in the 4th century, no less) explicitly considered the same problem and “came to the conclusion that it is wrong to ask what there was before God created the universe, because God, who is outside time, created time along with the universe.” So, philosophers do get some things right after all, in this case a full millennium and a half before physicists.

It is interesting that Weinberg attempts to show how philosophy (of science) occasionally does help science itself, but only insofar as it frees scientists from other, bad, philosophy. His examples are illuminating as to where at least part of the problem with his analysis resides. He mentions, for instance, that logical positivism — a philosophical position that was current in the early part of the 20th century — “helped to free Einstein from the notion that there is an absolute sense to a statement that two events are simultaneous; he found that no measurement could provide a criterion for simultaneity that would give the same result for all observers. This concern with what can actually be observed is the essence of positivism.” Moreover, again according to Weinberg, positivism played a constructive role in the beginning of quantum mechanics: “The uncertainty principle, which is one of the foundations of the probabilistic interpretation of quantum mechanics, is based on Heisenberg’s positivistic analysis of the limitations we encounter when we set out to observe a particle’s position and momentum.”

But Weinberg then complains that the “aura” of positivism outlasted its value, and that its philosophical framework began to hinder research in fundamental physics. He endorses George Gale’s opinion that positivism is likely to blame for the current negative relationship between philosophers and physicists, going on to list examples of alleged damage, such as the resistance to atomism and the resulting delayed acceptance of statistical mechanics, as well as the late acceptance of the wave function as a physical reality. But even assuming that Weinberg’s take on the history of science is correct (after all, he is relying on anecdotal evidence, not on a professional, systematic historical analysis), there is a basic fallacy underlying his reasoning. He is imagining a static model of philosophy, whereby views do not change, much less progress, over time. But why should that be? Why is it acceptable that science abandons, say, Newtonian mechanics in favor of relativity theory, while philosophy cannot abandon logical positivism in favor of more sophisticated notions? Indeed, this is precisely what happened (Ladyman 2012). It is simply historically incorrect to claim that positivism may underlie the current disagreements between philosophers and physicists, because philosophers have not considered logical positivism a viable notion in philosophy of science since at the least the middle part of the 20th century, following the devastating critiques of Popper and others in the 1930s, and ending with those of Quine, Putnam and Kuhn in the 1960s. If physicists still think that positivism commands the field in philosophy then it is the physicists who need to update their notions of where philosophy is. When Weinberg states that “it seems to me unlikely that the positivist attitude will be of much help in the future” he is absolutely right, but no philosopher of science would dispute that, or has done so for a number of decades.

At this point in Weinberg’s essay there is what appears to be a seamless transition, but is instead a logical gap that reveals much about some scientists’ misconceptions regarding philosophy. After having dispatched logical positivism, the author turns to attack “philosophical relativists,” by which he likely means some of the most extreme postmodernist and deconstructionist authors that played a role in the so-called “science wars” of the 1990s (Sokal and Bricmont 2003). I will tackle this particular episode in more detail below and then again in the next chapter, but it is worth remarking here that scientists such as physicist Alan Sokal have been joined in force in rebutting epistemic relativism by a number of philosophers, mostly, in fact, philosophers of science. What Weinberg does not appreciate is that philosophers of science are typically highly respectful of science and do come to its defense whenever this is needed (other classic examples include the debate over Intelligent Design (Pennock 1998) and discussions about pseudoscience (Pigliucci and Boudry 2013)).

Indeed, Weinberg himself could use some philosophical pointers when he struggles against the postmodern charge that science does not make progress. He says: “I cannot prove that science is like this [making progress], but everything in my experience as a scientist convinces me that it is. The ‘negotiations’ over changes in scientific theory go on and on, with scientists changing their minds again and again in response to calculations and experiments, until finally one view or another bears an unmistakable mark of objective success. It certainly feels to me that we are discovering something real in physics, something that is what it is without any regard to the social or historical conditions that allowed us to discover it.” What Weinberg cannot prove, what he has to resort to his gut feelings to argue for, is the bread and butter of the realism-antirealism debate in philosophy of science, to which we will turn in some depth later on, as one of the best illustrations of the idea underlying this book, that philosophy makes progress.

The second example of a scientist who misunderstands philosophy that I wish to discuss in order to build my case is another physicist, Lawrence Krauss. He first presented his thoughts on the matter in an interview with The Atlantic magazine conducted by journalist Ross Andersen (Andersen 2012). To put things in context, the discussion took off with a reference to Krauss’ book on cosmology for the general public, A Universe from Nothing: Why There is Something Rather Than Nothing, in which Krauss maintains that science has all but solved the old (philosophical) question of why there is a universe in the first place. The book was much praised shortly after publication, but was later harshly criticized by David Albert in the New York Times. Here is Albert (2012) summarizing the gist of his criticism of Krauss, that the physicist played a bait and switch with his readers, substituting quantum fields for the “nothing” of the book’s title:

“The particular, eternally persisting, elementary physical stuff of the world, according to the standard presentations of relativistic quantum field theories, consists (unsurprisingly) of relativistic quantum fields ... They have nothing whatsoever to say on the subject of where those fields came from, or of why the world should have consisted of the particular kinds of fields it does, or of why it should have consisted of fields at all, or of why there should have been a world in the first place. Period. Case closed. End of story.”

That’s harsh, as much as I think it is on target, and Krauss understandably didn’t like Albert’s review. Still, I wonder if Krauss was justified in referring to Albert as a “moronic philosopher,” considering that the latter is not only a highly respected philosopher of physics at Columbia University, but also holds a PhD in theoretical physics. I didn’t think Rockefeller University (where Albert got his degree) gave out PhDs to morons, but I could be wrong.

Nonetheless, let’s get to the core of Krauss’ attack on philosophy. He said: “Every time there’s a leap in physics, it encroaches on these areas that philosophers have carefully sequestered away to themselves, and so then you have this natural resentment on the part of philosophers.” This seems to show a couple of things: first, that Krauss does not appear to genuinely care to understand what the business of philosophy (especially philosophy of science) is, or he would have tried a bit harder; second, that he doesn’t mind playing armchair psychologist, despite the dearth of evidence for his pop psychological “explanation” of why philosophers allegedly do what they do.

Here is another gem: “Philosophy is a field that, unfortunately, reminds me of that old Woody Allen joke, ‘those that can’t do, teach; and those that can’t teach, teach gym.’ And the worst part of philosophy is the philosophy of science; the only people, as far as I can tell, that read work by philosophers of science are other philosophers of science. It has no impact on physics whatsoever. ... They have every right to feel threatened, because science progresses and philosophy doesn’t.”

In response to which, I think, it would be fair to point out that the only people who read works in theoretical physics are theoretical physicists. More seriously, once again, the major aim of philosophy (of science, in particular) is not to solve scientific problems. To see how strange Krauss’ complaint is, just think of what it would sound like if he had said that historians of physics haven’t solved a single puzzle in theoretical physics. That’s because historians do history, not science. And the reader will have noticed the jab at philosophy for not making progress, which is the underlying reason to discuss these statements in the present volume to begin with.

Andersen, at this point in the interview, must have been a bit fed up with Krauss’ ego, so he pointed out that actually philosophers have contributed to a number of science or science-related fields, and mentioned computer science and its intimate connection with logic. He even named Bertrand Russell as a pivotal figure in this context. Ah, responded Krauss, but really, logic is a branch of mathematics, so philosophy doesn’t get credit. And at any rate, Russell was a mathematician, according to Krauss, so he doesn’t count either. The cosmologist goes on to claim that Wittgenstein was “very mathematical,” as if it were somehow surprising to find philosophers who are conversant in logic and math.

Andersen, however, wasn’t moved and insisted: “Certainly philosophers like John Rawls have been immensely influential in fields like political science and public policy. Do you view those as legitimate achievements?” And here Krauss was forced to deliver one of the lamest responses I can recall in a long time: “Well, yeah, I mean, look I was being provocative, as I tend to do every now and then in order to get people’s attention.” This is a rather odd admission from someone who later on in the same interview claims that “if you’re writing for the public, the one thing you can’t do is overstate your claim, because people are going to believe you.”

Krauss also has a naively optimistic view of the business of science, as it turns out. For instance, he claims that “the difference [between scientists and philosophers] is that scientists are really happy when they get it wrong, because it means that there’s more to learn.” I’ve practiced science for a quarter century, and I’ve never seen anyone happy to be shown wrong, or who didn’t react as defensively (or even offensively) as possible to any suggestion that he might be wrong. Indeed, as physicist Max Planck famously put it, “science progresses funeral by funeral,” because often the old generation has to retire and die before new ideas really take hold. Scientists are just human beings, and like all human beings they are interested in mundane things like sex, fame and money (and yes, the pursuit of knowledge). Science is a wonderful and wonderfully successful activity, but there is no reason to try to make its practitioners into some species of intellectual saints that they certainly are not.

Finally, on the issue of whether Albert the “moronic” theoretical physicist-philosopher has a point in criticizing Krauss’ book, Andersen remarked: “It sounds like you’re arguing that ‘nothing’ is really a quantum vacuum, and that a quantum vacuum is unstable in such a way as to make the production of matter and space inevitable. But a quantum vacuum has properties. For one, it is subject to the equations of quantum field theory. Why should we think of it as nothing?” To which Krauss replied by engaging in what looks to me like a bit of handwaving: “I don’t think I argued that physics has definitively shown how something could come from nothing; physics has shown how plausible physical mechanisms might cause this to happen. ... I don’t really give a damn about what ‘nothing’ means to philosophers; I care about the ‘nothing’ of reality. And if the ‘nothing’ of reality is full of stuff, then I’ll go with that.” A nothing full of stuff? No wonder Albert wasn’t convinced.

But, insisted Andersen, “when I read the title of your book, I read it as ‘questions about origins are over.’” To which Krauss responded: “Well, if that hook gets you into the book that’s great. But in all seriousness, I never make that claim. ... If I’d just titled the book ‘A Marvelous Universe,’ not as many people would have been attracted to it.” Again, this from someone who had just lectured readers about honesty in communicating with the public. I think my case about Krauss can rest here, though interested readers are invited to check his half-hearted “apology” stemming from his exchange with Andersen and published in Scientific American (Krauss 2012), or his equally revealing interview in The Guardian with philosopher Julian Baggini (Baggini and Krauss 2012).

I do not wish to leave the reader with the impression that only some physicists display a dyspeptic reaction toward philosophy; a few life scientists do it too. Perhaps my favorite example is writer Sam Harris (2011), who, on the strength of his graduate research in neurobiology, wrote a provocative book entitled The Moral Landscape: How Science Can Determine Human Values. What Harris set out to do in that book was nothing less than to mount a science-based challenge to Hume’s classic separation of facts from values. For Harris, values are facts, and as such they are amenable to scientific inquiry.

Before I get to the meat, let me point out that I think Harris undermines his own project in two endnotes tucked in at the back of his book. In the second note to the Introduction, he acknowledges that he “do[es] not intend to make a hard distinction between ‘science’ and other intellectual contexts in which we discuss ‘facts.’” But if that is the case, if we can define “science” as any type of rational-empirical inquiry into “facts” (the scare quotes are his) then we are talking about something that is not at all what most readers are likely to understand when they pick up a book with a subtitle that says “How Science Can Determine Human Values.” One can reasonably smell a bait and switch here. Second, in the first footnote to chapter 1, Harris says: “Many of my critics fault me for not engaging more directly with the academic literature on moral philosophy ... [But] I am convinced that every appearance of terms like ‘metaethics,’ ‘deontology,’ ... directly increases the amount of boredom in the universe.” In other words, the whole of the only field other than religion that has ever dealt with ethics is dismissed by Sam Harris because he finds its terminology boring. Is that a fact or a value judgment, I wonder?

Broadly speaking, Harris wants to deliver moral decision making to science because he wants to defeat the evil (if oddly paired) twins of religious fanaticism and moral relativism. Despite the fact that I think he grossly overestimates the pervasiveness of the latter, we are together on this. Except of course that the best arguments against both positions are philosophical, not scientific. For instance, the most convincing reason why gods cannot possibly have anything to do with morality was presented 24 centuries ago by Plato (circa 399 BCE / 2012), in his Euthyphro dialogue (which goes, predictably, entirely unmentioned in The Moral Landscape). In the dialogue, Socrates asks a young man named Euthyphro the following question: “The point which I should first wish to understand is whether the pious or holy is beloved by the gods because it is holy, or holy because it is beloved of the gods?” That is, does God embrace moral principles naturally occurring and external to Him because they are sound (“holy”) or are these moral principles sound because He endorses them? It cannot be both, and the choice is not pretty, since the two horns lead to either a concept of (divine, in this instance) might makes right or to the conclusion that moral principles are independent of gods, which means we can potentially access them without the intermediacy of the divine. As for moral relativism, it has been the focus of sustained and devastating attacks in philosophy, for instance by thinkers such as Peter Singer and Simon Blackburn, but of course in order to be aware of that one would have to read precisely the kind of metaethical literature that, according to Harris, increases the amount of boredom in the universe.

Ultimately, Harris really wants science, and particularly neuroscience (which just happens to be his own specialty), to help us out of our moral quandaries. Except that the reader will wait in vain throughout the book to find a single example of new moral insights that science provides us with. For instance, Harris tells us that genital mutilation of young girls is wrong. I agree, but certainly we have no need of fMRI scans to tell us why: the fact that certain specific regions of the brain are involved in pain and suffering, and that we might be able to measure exactly the intensity of those emotions, doesn’t add anything at all to the conclusion that genital mutilation is wrong because it violates an individual’s right to physical integrity and to avoid pain unless absolutely necessary (e.g., during a surgical operation to save her life, if no anesthetic is available).

Indeed, Harris’ insistence on neurobiology becomes at times positively creepy (a sure mark of scientism since at least the eugenics era: Adams 1990), as in the section where he seems to relish the prospect of a neuro-scanning technology that will be able to tell us if anyone is lying, opening the prospect of a world where government (and corporations) will be able to enforce “no-lie zones” upon us. He writes: “Thereafter, civilized men and women might share a common presumption: that whenever important conversations are held, the truthfulness of all participants will be monitored. ... Many of us might no more feel deprived of the freedom to lie during a job interview or at a press conference than we currently feel deprived of the freedom to remove our pants in the supermarket.” I don’t know about you, but for me these sentences conjure the specter of a really, really scary Big Brother, which I most definitely would rather avoid, science be damned (on the dangers of too much utilitarianism, see: Thomas et al. 2011).

At several points in the book Harris seems to think that neurobiology will be so important for ethics that we will be able to tell whether people are happy by scanning them and making sure their pleasure centers are activated. He goes so far as arguing that scientific research shows that we are wrong about what makes us happy, and that it is conceivable that “evil” (quite a metaphysically loaded term, for a book that shies away from philosophy) might turn out to be one of many paths to happiness, meaning the stimulation of certain neural pathways in our brains. Besides the obvious point that if what we want to do is stimulate our brains so that we feel perennially “happy” then all we need are appropriate drugs to be injected into our veins while we sit in a pod in perfectly imbecilic contentment (see Nozick’s (1974, 644-646) famous experience machine thought experiment), these are all excellent observations that ironically deny that science, by itself, can answer moral questions. As Harris points out, for instance, research shows that people become less happy when they have children. What does this scientific fact about human behavior have to do with ethical decisions concerning whether and when to have children? [4]

Moreover, as we saw, Harris entirely evades philosophical criticism of his positions on the simple ground that he finds metaethics “boring.” But he is a self-professed consequentialist who simply ducks any discussion of the implications of that a priori choice of ethical framework, a choice that informs his entire view of what counts as morality, happiness, well-being and so forth. He seems unaware of (or doesn’t care about) the serious philosophical objections that have been raised against consequentialism, and even less so of the various counter-moves in conceptual space that consequentialists have made to defend their position (we will explore some of this territory). This ignorance is not bliss, and it is the high price Harris’ readers pay for the crucial evasive maneuvers that the author sneaks into the initial footnotes I mentioned above.

Now, what are we to make of all of the above? I am not particularly interested in simply showing how philosophically naive Weinberg, Krauss, Harris or several others (the list is surprisingly long, and getting longer) are, as much fun as that sometimes is. Rather, I want to ask the broader question of what underlies these scientists’ take on science and philosophy. I think it is fair to say that the above criticisms of philosophy are built on the following implicit argument:

Premise 1: Empirical evidence is the province of science (and only science).

Premise 2: All meaningful / answerable questions are by nature empirical.

Premise 3: Philosophy does not deal with empirical questions.

Conclusion: Therefore, science is the only activity that addresses meaningful / answerable questions.

Corollary: Philosophy is useless.